As the marketing environment becomes increasingly complex, with more channels, shorter campaign cycles, and growing data volumes, the pressure on data infrastructure intensifies. On top of that, the adoption of AI adds another layer of complexity.

To meet these demands, organizations turn to two foundational methods: ETL and data integration. While the terms are often used interchangeably, they serve distinct purposes.

This article provides a comprehensive comparison of ETL and data integration, exploring their roles in modern marketing analytics and explaining why the most effective approach often combines both.

What Is ETL?

The ETL process

The ETL process involves three key stages:

- Extract: Data is pulled from various marketing sources, such as ad platforms, CRMs, email tools, and web analytics. This step often deals with inconsistent APIs, rate limits, and platform-specific logic, which must be normalized for analysis.

- Transform: This is where the data is cleaned, enriched, and aligned to a consistent schema. For marketing use cases, this includes mapping channel-specific metrics to standardized definitions (for example, treating “spend” and “cost” as the same metric), applying business rules, stitching user journeys, and preparing data for further analysis.

- Load: The final step is loading the transformed data into a destination, typically a data warehouse or a reporting tool, where it can be queried by BI dashboards, AI agents, or custom reports.
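The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the platform names, field names, and sample rows are hypothetical, the extract step is a stand-in for real API calls, and an in-memory SQLite database stands in for a data warehouse.

```python
import sqlite3

# Hypothetical raw rows, shaped the way two ad platforms might return them.
# One platform reports "spend", the other "cost" (assumed field names).
RAW_ROWS = [
    {"source": "platform_a", "date": "2024-05-01", "campaign": "Spring Sale",
     "spend": "120.50", "clicks": "300"},
    {"source": "platform_b", "date": "2024-05-01", "campaign": "spring_sale",
     "cost": "80.00", "clicks": "150"},
]

def extract():
    """Extract: stand-in for API calls (a real step handles auth, paging, rate limits)."""
    return list(RAW_ROWS)

def transform(rows):
    """Transform: map platform-specific fields onto one schema and coerce types."""
    unified = []
    for row in rows:
        # "spend" and "cost" are treated as the same metric in the unified schema.
        raw_spend = row["spend"] if "spend" in row else row["cost"]
        unified.append({
            "source": row["source"],
            "date": row["date"],
            "campaign": row["campaign"].strip().lower().replace(" ", "_"),
            "spend": float(raw_spend),
            "clicks": int(row["clicks"]),
        })
    return unified

def load(rows, conn):
    """Load: write unified rows into a destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ad_performance "
        "(source TEXT, date TEXT, campaign TEXT, spend REAL, clicks INTEGER)"
    )
    conn.executemany(
        "INSERT INTO ad_performance VALUES (:source, :date, :campaign, :spend, :clicks)",
        rows,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
total_spend = conn.execute(
    "SELECT campaign, SUM(spend) FROM ad_performance GROUP BY campaign"
).fetchall()
print(total_spend)  # [('spring_sale', 200.5)]
```

Because the campaign name and the spend field are normalized before loading, the two platforms roll up into one row in the final query.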

Benefits of ETL

ETL is the foundation for scalable, accurate, and governed reporting. Below are the key benefits it brings to marketing data environments.

1. Single source of truth

One of the most valuable outcomes of a well-implemented ETL process is the creation of a single source of truth for marketing data. Rather than relying on fragmented reports from individual platforms, each with its own logic, metrics, and attribution rules, ETL centralizes and aligns all relevant data into a unified structure.

This consolidation ensures that every team, from performance marketing to executive leadership, is working from the same metrics and definitions.

2. Operational efficiency and resource savings

Manual data cleaning, normalization, and validation consume a significant amount of time, especially in multi-platform environments.

ETL automates these steps, reducing dependency on analysts for routine tasks. It frees up resources for higher-impact work, such as strategy, modeling, or optimization. Over time, this operational efficiency compounds, especially as data volume grows.

3. Improved attribution accuracy

Marketing platforms rarely speak the same language. ETL pipelines resolve differences in naming conventions, metric definitions, and data formats, transforming fragmented inputs into a unified schema. They also stitch user journeys, resolve identity conflicts, and enforce consistent logic across platforms.

This leads to more accurate channel valuations, better budget allocation, and defensible ROI calculations.
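As a rough illustration of journey stitching feeding attribution, the sketch below resolves platform-specific IDs to one person and then splits conversion revenue evenly across that person's touchpoints. The identity map, the sample data, and the choice of a linear attribution model are all assumptions made for the example; the article does not prescribe a specific model.

```python
from collections import defaultdict

# Hypothetical identity map: platform-specific IDs resolved to one person.
IDENTITY_MAP = {"ga:111": "user_1", "fb:abc": "user_1", "crm:42": "user_1",
                "ga:222": "user_2"}

# Hypothetical touchpoints and conversions from different platforms.
TOUCHPOINTS = [
    {"id": "fb:abc", "ts": "2024-05-01T09:00", "channel": "paid_social"},
    {"id": "ga:111", "ts": "2024-05-02T10:00", "channel": "paid_search"},
    {"id": "ga:222", "ts": "2024-05-02T11:00", "channel": "email"},
]
CONVERSIONS = [{"id": "crm:42", "revenue": 100.0}, {"id": "ga:222", "revenue": 50.0}]

def stitch_journeys(touchpoints, identity_map):
    """Group touchpoints by resolved user, ordered by timestamp."""
    journeys = defaultdict(list)
    for tp in sorted(touchpoints, key=lambda t: t["ts"]):
        journeys[identity_map[tp["id"]]].append(tp["channel"])
    return dict(journeys)

def linear_attribution(journeys, conversions, identity_map):
    """Split each conversion's revenue evenly across the user's touchpoints."""
    credit = defaultdict(float)
    for conv in conversions:
        channels = journeys.get(identity_map[conv["id"]], [])
        for channel in channels:
            credit[channel] += conv["revenue"] / len(channels)
    return dict(credit)

journeys = stitch_journeys(TOUCHPOINTS, IDENTITY_MAP)
credit = linear_attribution(journeys, CONVERSIONS, IDENTITY_MAP)
print(journeys)
print(credit)
```

Without the identity map, the CRM conversion for `crm:42` could not be tied to the social and search touchpoints recorded under other IDs, and no channel would receive credit for it.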

4. Scalability for data and organizational growth

As marketing operations scale across regions, brands, or channels, data complexity increases.

ETL pipelines are designed to accommodate this growth without disrupting existing analytics workflows. New sources, rules, or taxonomies can be integrated systematically, ensuring analytics remain stable and trustworthy as the business evolves.

Popular ETL tools

ETL platforms can be general-purpose or tailored for specific business functions. Tools purpose-built for marketing offer faster implementation, pre-built connectors, and workflows optimized for campaign data, attribution, and reporting.

Here are several popular ETL solutions for marketing use cases:

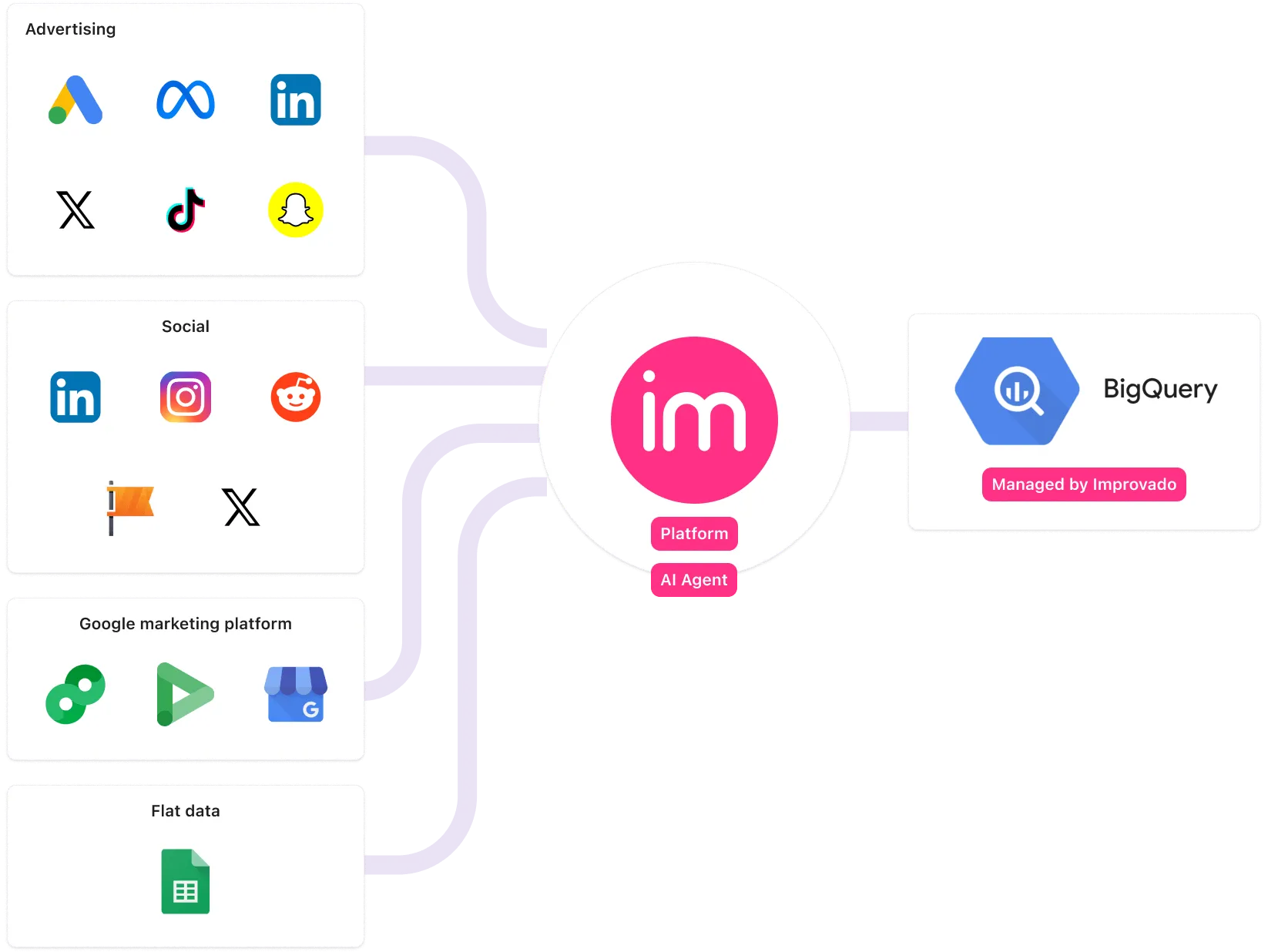

- Improvado: Marketing-specific ETL platform designed for enterprise teams and agencies. Automates data extraction, transformation, and normalization across 500+ platforms with no engineering effort. Ideal for unified reporting, attribution, and AI-powered analysis.

- Fivetran: General-purpose ETL tool with strong automation and connector coverage. Supports basic transformation logic and is widely used in broader analytics workflows, though it often requires additional tools for marketing-specific use cases.

- Supermetrics: Designed for marketers, offering a simple setup to pull campaign data into spreadsheets, BI tools, or warehouses. Best for small teams or lightweight reporting workflows with minimal transformation needs.

- Adverity: A data platform focused on marketing and ecommerce analytics. Offers ETL capabilities, transformation layers, and dashboards. Geared toward midsize to large organizations with moderate technical resources.

- Matillion: Cloud-native ETL tool often used with data warehouses like Snowflake or BigQuery. Not marketing-specific, but supports custom transformations and scaling through engineering-led configurations.

What Is Data Integration?

Benefits of data integration

Data integration enables real-time access to marketing data across systems and platforms, which makes it critical for day-to-day campaign management and operational visibility.

1. Faster access to insights

Data integration enables near real-time synchronization between marketing platforms, CRMs, and analytics tools, so teams can continuously monitor campaign performance without waiting for daily batch processes.

When optimizing media spend or testing new creatives, speed to insight can be the difference between a wasted budget and a well-timed adjustment.

2. Streamlined connectivity across tools

Modern marketing stacks are fragmented by design. Ad platforms, email tools, analytics suites, and sales systems all operate independently.

Data integration addresses this by connecting these tools through APIs, middleware, or connectors, allowing data to flow seamlessly without manual effort.

This streamlines workflows and reduces the need for copy-paste reporting or manual data enrichment.

3. Flexibility for lightweight use cases

Data integration is ideal for use cases that don’t require extensive transformation, such as syncing campaign data to a dashboard, passing CRM events to a reporting tool, or triggering actions between platforms.

It gives teams visibility and automation without the complexity of full-scale data modeling.

Additionally, data integration is typically faster to deploy. Unlike ETL, which often requires custom transformation rules and warehouse infrastructure, plug-and-play connectors or native integrations reduce setup time, making it easier to build initial dashboards or enable self-service analytics.
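The lightweight, pass-through style of integration described in this section might look like the sketch below, where records are forwarded unchanged from a source to one or more destinations. The `crm_events` source and the two in-memory destinations are hypothetical stand-ins for real connectors.

```python
def sync(source, destinations):
    """Forward each record unchanged to every destination; return the record count."""
    count = 0
    for record in source():
        for deliver in destinations:
            deliver(record)
        count += 1
    return count

def crm_events():
    """Hypothetical source: yields CRM events exactly as the CRM emits them."""
    yield {"event": "lead_created", "email": "a@example.com"}
    yield {"event": "deal_won", "email": "b@example.com"}

# In-memory lists stand in for a dashboard and a reporting tool.
dashboard, reporting_tool = [], []

forwarded = sync(crm_events, [dashboard.append, reporting_tool.append])
print(forwarded, dashboard == reporting_tool)
```

Nothing is renamed, validated, or reconciled along the way. That keeps setup fast, and it is also why downstream tools inherit whatever inconsistencies the source contains.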

Popular data integration tools

Data integration is a broad term that covers everything from simple API connections to full ETL pipelines. As a result, many platforms fall under the umbrella of “data integration tools.”

Here are several widely used data integration tools in marketing environments:

- Improvado: While primarily an ETL platform, Improvado also functions as a marketing-focused integration layer with hundreds of prebuilt connectors. It centralizes schema management and ensures consistent data delivery across reporting and BI tools.

- Zapier: A no-code automation platform that connects marketing tools and triggers workflows between them. Great for small tasks, such as syncing leads from forms to CRMs, but not suited for heavy analytics or structured data reporting.

- Segment: Customer data platform with real-time event streaming and integration across marketing, product, and analytics tools. Ideal for unifying user behavior data and activating it across platforms.

- Stitch: Lightweight data integration tool focused on simplicity and speed. Offers a range of connectors and basic transformation options but lacks in-depth support for marketing-specific metrics or complex data preparation.

- Tray.io: Low-code automation and integration platform used to connect marketing, sales, and ops tools. Offers more flexibility than Zapier, but typically requires more configuration and some technical knowledge.

Key Differences between ETL and Data Integration

While ETL and data integration overlap in centralizing data, they serve distinct roles, and the choice between them can significantly impact marketing analytics, data reliability, and attribution accuracy.

| Aspect | ETL | Data Integration |
|---|---|---|
| Scope | Purpose-built for batch processing, standardization, and preparing data for analytics. | Refers to broader strategies for unifying data, including bringing together fragmented data streams without enforcing extensive transformation logic up front. |
| Data Transformation | Always involves a transformation step to enforce schema, quality, and consistency before loading. | May or may not include substantial transformation. Data may be integrated as is. |
| Timing | Typically batch-oriented: data is extracted and loaded in scheduled batches (e.g., daily or hourly). | Often real-time or near-real-time, especially for operational integrations (e.g., sync data as events happen). |
| Destinations | Primarily loads data into central data stores like data warehouses, data marts, or lakes. | Can deliver data to various destinations: a data warehouse, directly into applications, dashboards, or data lakes. |
| Data Volume | Optimized for large-scale data consolidation. | Designed to handle anything from small, frequent updates to large-scale streaming data. It all depends on the integration solution. |
| Complexity | Often requires data engineering expertise to set up and maintain, especially for complex transformations or custom workflows. | Many data integration tasks can be configured by analysts or operations staff using no-code integration platforms. |

In summary, ETL focuses on structured batch data preparation, whereas data integration is an overarching concept that encompasses ETL alongside other methods to unify data.

ETL is typically employed for heavy-duty data preparation that feeds into analytics systems, while data integration encompasses everything from analytical pipelines to real-time application integrations.

Choosing the right approach: ETL vs. data integration

ETL and direct data integration are complementary: many organizations use ETL processes to feed their analytical databases and use integration tools to sync data between operational systems.

In many cases, you will use both, but certain scenarios favor one approach over the other. Let's look at some examples.

| Marketing Analytics Scenario | ETL | Direct Data Integration |
|---|---|---|
| Multi-touch attribution modeling across channels | ✅ Required for standardizing source logic, aligning time windows, and de-duplicating user journeys | ⚠ Raw data often inconsistent across platforms; may lead to inaccurate attribution |
| Cross-platform campaign performance reporting (e.g., Facebook vs. Google Ads) | ✅ Enables normalized metrics (e.g., unified CPC/ROAS), clean taxonomy, and platform reconciliation | ⚠ Metric definitions vary by platform; direct API pulls may misalign key KPIs |
| Real-time budget pacing and spend monitoring | ⚠ Batch delays may limit intra-day responsiveness | ✅ Near real-time visibility via API sync; ideal for active campaign oversight |
| Data warehouse as single source of truth | ✅ ETL centralizes and governs all data transformations before loading | ⚠ Data is often scattered across platforms and lacks clear tracking or consistency |
| Integration with AI agents or natural language query tools | ✅ Provides clean, structured data for accurate answers | ⚠ Raw or inconsistent inputs may reduce reliability of AI-generated responses |
| Custom business logic for KPI definitions (e.g., paid media ROI, funnel stage attribution) | ✅ Transformation layer handles rule-based logic at scale | ⚠ Logic often needs to be re-applied downstream or managed manually |
| Marketing mix modeling and predictive forecasting | ✅ Requires high-integrity historical data and consistent metric definitions | ⚠ Incomplete or inconsistent data lowers model accuracy |
| Self-service dashboards for leadership and cross-functional teams | ✅ Structured, governed data enables scalable and trustworthy reporting | ⚠ Risk of misinterpretation or conflicting metrics without centralized transformation |
| Speed to initial implementation or MVP | ⚠ Requires more upfront planning and engineering resources | ✅ Fast to deploy with prebuilt connectors and minimal setup |
| Attribution in privacy-restricted or cookie-constrained environments | ✅ ETL supports enrichment and identity resolution from multiple sources | ⚠ May lack stitching logic for cross-device or anonymous touchpoints |

ETL and data integration simply represent different strategic approaches:

- ETL supports attribution correctness, cross-channel comparisons, historical modeling, and any scenario where data quality cannot be compromised.

- Data integration supports scenarios where speed and flexibility are top priorities and data needs to flow between systems continuously, though with potential risks to accuracy.

Future Trends in Data Integration and ETL

As AI becomes more deeply embedded in marketing operations, it raises fundamental questions about the quality, accessibility, and structure of the data feeding those systems. This is putting new pressure on how marketing data is integrated, transformed, and operationalized.

Both short- and long-term data integration and ETL trends reflect the changing nature of marketing workflows and the new demands of marketing organizations.

1. Real-time data integration growth

One major trend is the convergence of ETL and real-time integration. Traditional batch ETL processes are evolving into more flexible frameworks that allow for streaming data ingestion, incremental updates, and near real-time transformation.
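One common way to implement incremental updates is a watermark: the pipeline remembers the latest `updated_at` value it has processed and fetches only newer rows on the next run. The sketch below assumes a hypothetical source table with ISO 8601 timestamps, which sort correctly as plain strings.

```python
# Hypothetical source table; "updated_at" uses ISO 8601 so string order matches time order.
SOURCE = [
    {"id": 1, "updated_at": "2024-05-01T08:00:00", "spend": 10.0},
    {"id": 2, "updated_at": "2024-05-01T12:00:00", "spend": 20.0},
    {"id": 3, "updated_at": "2024-05-02T09:00:00", "spend": 30.0},
]

def incremental_extract(source_rows, watermark):
    """Return rows updated after the watermark, plus the advanced watermark."""
    new_rows = [r for r in source_rows if r["updated_at"] > watermark]
    # If nothing is new, keep the old watermark.
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark

watermark = "2024-05-01T08:00:00"  # state saved by a previous run (assumed)
first_batch, watermark = incremental_extract(SOURCE, watermark)
second_batch, watermark = incremental_extract(SOURCE, watermark)

print([r["id"] for r in first_batch])  # [2, 3]
print(second_batch)                    # []
```

The strict `>` comparison keeps reruns from reloading the same rows, at the cost of missing a late row that shares the watermark's exact timestamp. Pipelines that cannot tolerate that gap often re-read a small overlap window and de-duplicate on load.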

This hybrid model helps organizations meet both governance needs and the demand for immediacy, supporting use cases such as anomaly detection, pacing alerts, and AI-driven performance summaries.

In the long term, real-time integration is set to become the default.

2. AI-powered integration and automation

Another key development is the rise of automated and AI-powered ETLs. AI is already helping marketing data teams manage growing complexity by automating tasks like data mapping, transformation, and anomaly detection.

In the long term, AI-driven integration could minimize human intervention altogether in many data workflows.

This means marketing analysts could rely on intelligent systems to adjust ETL logic as schemas change or new data sources are added, without writing code or submitting IT or support tickets.

3. The shift toward composable data stacks

One of the most significant shifts in marketing data infrastructure is the move toward composable data stacks, where ETL, reverse ETL, integration, observability, and AI tooling can be mixed and matched.

Unlike monolithic platforms that bundle ETL, storage, reporting, and activation into a single solution, composable stacks allow teams to select best-in-class tools for each stage of the data lifecycle.

Composable stacks also enable faster iteration cycles. For example, a team might use a dedicated ETL tool to bring normalized data into a warehouse, pair it with a reverse ETL tool to push clean data back into ad platforms for activation, and overlay AI agents for real-time performance diagnostics. This unbundled approach accelerates the adoption of new capabilities without being locked into legacy vendor limitations.

4. Self-healing data pipelines

As marketing data pipelines grow more complex, even minor pipeline failures, such as API schema changes or broken transformations, can derail reporting.

To address this, a growing trend is the development of self-healing data pipelines that can detect, diagnose, and remediate issues automatically.

These systems use observability frameworks, anomaly detection, and rule-based logic to monitor data flow in real-time. When errors occur, such as missing fields, delayed syncs, or corrupted values, the pipeline can trigger alerts, apply predefined fallbacks, or rerun specific jobs without human intervention.
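A minimal sketch of the rule-based part of this behavior is shown below: a job is validated after each run, retried on errors or invalid output, and replaced by a predefined fallback if retries run out. The required fields, the flaky job, and the fallback are hypothetical; real systems would add alerting and persistent logs.

```python
REQUIRED_FIELDS = {"date", "campaign", "spend"}  # assumed schema for the example

def validate(rows):
    """Return a list of problems; an empty list means the batch looks healthy."""
    problems = []
    if not rows:
        problems.append("no rows")
    for i, row in enumerate(rows):
        missing = REQUIRED_FIELDS - set(row)
        if missing:
            problems.append(f"row {i} missing {sorted(missing)}")
    return problems

def run_with_healing(job, validate, fallback, max_retries=2):
    """Run a job, rerun it on errors or invalid output, then fall back."""
    log = []
    for attempt in range(1, max_retries + 2):
        try:
            rows = job()
        except Exception as exc:
            log.append(f"attempt {attempt}: error ({exc})")
            continue
        problems = validate(rows)
        if not problems:
            log.append(f"attempt {attempt}: ok")
            return rows, log
        log.append(f"attempt {attempt}: invalid ({', '.join(problems)})")
    log.append("fallback used")
    return fallback(), log

# Hypothetical job: a delayed sync, then a missing field, then a clean run.
attempts = {"n": 0}

def flaky_job():
    attempts["n"] += 1
    if attempts["n"] == 1:
        raise TimeoutError("sync delayed")
    if attempts["n"] == 2:
        return [{"date": "2024-05-01", "campaign": "spring_sale"}]
    return [{"date": "2024-05-01", "campaign": "spring_sale", "spend": 200.5}]

rows, log = run_with_healing(flaky_job, validate, fallback=lambda: [])
for line in log:
    print(line)
```

In this run the third attempt succeeds, so the fallback is never used. With `max_retries=0` and a job that keeps failing, the function would return the fallback's output and record "fallback used" in the log.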

In the near future, we’ll see more AI-powered monitoring that not only detects issues but also suggests or takes corrective action.

5. Event-driven and streaming pipelines

Traditional ETL often runs on schedules, whether it is daily or hourly, but event-driven architectures turn that model upside down: pipelines trigger the moment something happens.

This architecture is particularly valuable for time-sensitive use cases such as budget pacing, anomaly detection, and campaign optimization. For example, streaming data enables teams to react immediately to overspending on a specific channel or underperformance in a newly launched campaign rather than waiting for the next daily sync.
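The budget pacing case can be sketched with a tiny in-process publish/subscribe bus: each spend event triggers a handler immediately instead of waiting for a scheduled sync. The `EventBus` class, the event name, and the budget figures are hypothetical; a production setup would typically use a message broker or streaming platform instead.

```python
from collections import defaultdict

class EventBus:
    """Minimal in-process pub/sub: handlers run as soon as an event is published."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)

DAILY_BUDGET = {"paid_search": 100.0}  # assumed daily budget per channel
spend_so_far = defaultdict(float)
alerts = []

def on_spend(event):
    """Update running spend and raise an alert the moment a budget is exceeded."""
    channel = event["channel"]
    spend_so_far[channel] += event["amount"]
    budget = DAILY_BUDGET.get(channel)
    if budget is not None and spend_so_far[channel] > budget:
        alerts.append(
            f"{channel} over budget: {spend_so_far[channel]:.2f} > {budget:.2f}"
        )

bus = EventBus()
bus.subscribe("spend_recorded", on_spend)
for amount in (40.0, 45.0, 30.0):
    bus.publish("spend_recorded", {"channel": "paid_search", "amount": amount})

print(alerts)  # ['paid_search over budget: 115.00 > 100.00']
```

The alert fires on the third event, when cumulative spend first crosses the budget, rather than at the next daily batch.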

The next few years will see event-driven integration become more standard for time-sensitive marketing processes.

Across all these trends, one theme stands out: marketing data integration is becoming faster, smarter, and safer. In the next few years, marketing teams can expect real-time data streams and event-driven architectures to deliver near-instant insights. Meanwhile, AI and no-code tools are making data prep and integration more accessible than ever.

The Case for a Hybrid Approach

Choosing between ETL and data integration isn’t a binary decision. It’s about designing the right mix.

ETL brings the structure and consistency required for accurate attribution and long-term reporting. Data integration delivers the speed and flexibility needed for day-to-day visibility.

A hybrid approach supports both depth and agility.

Improvado simplifies the hybrid data strategy by providing a powerful ETL solution built for marketing. It automates data pipelines, standardizes metrics, and layers AI on top for faster insights.

Book a demo to see how it can support your team’s data infrastructure.
