Marketing and revenue teams depend on fast, accurate access to data, yet technical users often spend more time fixing pipelines than running models. APIs change, schemas break, and siloed platforms require manual stitching, which slows analysis and undermines confidence in results. At scale, these inefficiencies block advanced analytics.

This article examines how modern data extraction frameworks and tools address these challenges, making it possible to pull structured, reliable, and analysis-ready datasets from even the most fragmented sources.

What Is Data Extraction?

Within the broader data lifecycle, data extraction bridges the messy reality of scattered, heterogeneous data and the structured realm of transformation and analysis. It may be part of an ETL (Extract, Transform, Load) flow or an ELT (Extract, Load, Transform) model, depending on whether transformation happens before or after loading the data into warehouses, lakes, or analytics environments.

Data extraction workflow includes:

- Identifying and connecting to data sources, ranging from databases and SaaS platforms to files, APIs, and web pages.

- Choosing an extraction method, such as querying, API calls, web scraping, or OCR for documents.

- Performing the extraction, either as a full dump or, more sustainably, via incremental capture of new or updated data.

- Consolidating that data into a central staging area for further cleansing, validation, or transformation downstream.

Without reliable extraction, everything that follows—transformation, analytics, reporting, cracks at the seams. It’s where accuracy, completeness, and performance need to prove themselves before anything else.

What Are the Components of Data Extraction?

A mature data extraction framework is more than just pulling records from disparate systems. It’s about engineering a repeatable, fault-tolerant process that transforms fragmented inputs into governed, analysis-ready datasets.

Each component below represents a critical control point that safeguards scalability, accuracy, and downstream reliability.

1. Source identification and connection

Discovery isn't enough; robust extraction requires engineered connectivity.

Whether the source is a relational database, SaaS API, raw log file, or unstructured PDF, the key is establishing durable, secure connectors with automated credential management and error handling.

At scale, this stage also accounts for API rate limits, schema drift, and evolving vendor dependencies.

2. Extraction method

Choosing between full and incremental extraction is all about operational efficiency and trust in data freshness.

- Full pulls provide baselines, typically used in initial loads or audits.

- Incremental extraction (via change data capture, timestamp-based deltas, or event streams) minimizes processing cost. It accelerates availability, which is essential when working with high-frequency marketing or product usage data.

3. Staging and validation

Before entering production pipelines, data must survive rigorous validation. This stage checks schema conformity, null thresholds, referential integrity, and anomaly detection (for example, unexpected spikes in spend or missing campaign IDs).

The staging layer functions as a quarantine zone, ensuring downstream transformations and models aren't contaminated by malformed or incomplete inputs.

4. Delivery to the pipeline

Validated extracts are routed to warehouses, lakes, or direct API consumers.

This is where orchestration matters, ensuring correct load order, dependency management, and retry logic. Whether the target is a Snowflake warehouse, a real-time dashboard, or an ML model, this final step ensures datasets are consistently structured, queryable, and analysis-ready.

Together, these components create a resilient data extraction backbone. They reduce operational drag, provide confidence in accuracy, and accelerate the handoff from raw source to actionable insight.

Why Is Data Extraction Important?

In enterprise environments, fragmented data isn’t just inconvenient. It’s an operational bottleneck.

Extraction serves as the control point that transforms scattered records into governed, analysis-ready datasets. Done right, it reduces noise, accelerates workflows, strengthens confidence in every decision downstream, and brings a number of other benefits.

It eliminates manual data wrangling

Manual copy-paste and ad-hoc joins across platforms are both error-prone and unscalable. Automated extraction replaces this with governed pipelines that enforce accuracy, reduce human intervention, and redirect time toward higher-value analytics and strategy.

Enables scalable, consistent data pipelines for reporting and BI

Reliable extraction ensures reporting and BI are built on repeatable, trustworthy inputs. By consolidating sources into a unified, standardized stream, extraction becomes the launchpad for ETL/ELT workflows and the foundation for dashboards that finance, marketing, and leadership teams can trust.

Powers full-funnel insights

Marketing and sales data often exist in silos—ad performance, engagement touchpoints, CRM activity, and revenue outcomes rarely align without intervention. Extraction unifies these layers, producing holistic customer-journey views that reveal attribution patterns, retention levers, and personalization opportunities that siloed analysis would obscure.

Lays the groundwork for transformation and AI

No model, transformation, or ML workflow can function without clean, reliable inputs. Extraction establishes the raw, validated base that fuels advanced analysis, whether that’s predictive modeling, real-time anomaly detection, or AI-driven campaign optimization.

Protects decision accuracy

Errors introduced at the extraction layer don’t stay isolated—they cascade.

Missing rows, delayed refreshes, or schema drift distort dashboards, skew models, and misguide strategy. A resilient extraction framework ensures accuracy and timeliness, shielding downstream stakeholders from flawed inputs.

Drives operational efficiency

Efficient extraction reduces latency, infrastructure overhead, and manual rework. By automating incremental updates and optimizing data transfer, teams can shorten refresh cycles, lower compute costs, and scale pipelines without sacrificing performance or reliability.

Techniques and Tools for Data Extraction

Different extraction approaches fit different environments. Choosing the right method depends on source type, refresh needs, and scale.

Below are the core techniques with context for when they add the most value:

- Full extraction: Pulls the entire dataset in one sweep. Most effective for one-time or infrequent operations—such as onboarding a new platform, migrating historical records, or creating a system backup for compliance. Guarantees completeness but can be resource-heavy.

- Incremental extraction: Fetches only new or modified records since the last pull. This approach minimizes load, shortens refresh cycles, and scales well for production pipelines. Commonly used for daily CRM syncs, high-volume transaction data, or continuously updated campaign logs.

- APIs: Structured endpoints offered by most SaaS and ad platforms (JSON, XML, GraphQL) enable governed and repeatable pulls. APIs provide schema stability, authentication, and documentation—ideal for integrating sources like Google Ads, Salesforce, or HubSpot into dashboards and warehouses.

- Web scraping: When no API exists, scraping extracts data directly from HTML or DOM structures. It's typically applied to competitor monitoring, product listings, or pricing intelligence. While powerful, it requires careful governance to manage volatility (layout changes, anti-bot measures, or compliance).

- File parsing and OCR: Essential for semi-structured or unstructured formats. Parsing tools and optical character recognition (OCR) can extract data from PDFs, invoices, scanned financials, or system logs. Key in industries where contracts, receipts, or archival records hold critical insights.

- Stream capture (real-time sources): Captures continuous flows from sensors, apps, or digital interactions. Applied in IoT, clickstream monitoring, or fraud detection, where immediacy matters. Enables low-latency analysis pipelines that feed anomaly detection models or real-time dashboards.

| Data extraction technique | Best use case |

|---|---|

| Full extraction | One-time or infrequent loads, such as onboarding a new data source or archiving historical datasets |

| Incremental extraction | Large, frequently updated datasets where efficiency and scalability are required (e.g., daily CRM syncs, transaction logs) |

| APIs | Reliable, structured pulls from SaaS platforms and ad tools with stable schemas (e.g., Google Ads, Salesforce) |

| Web scraping | Collecting data from sources without APIs, such as competitor pricing or product catalogs |

| File parsing and OCR | Extracting structured data from PDFs, invoices, contracts, or scanned records |

| Stream capture (real-time) | Continuous monitoring of clickstream data, IoT devices, or fraud detection pipelines |

Best Practices for Data Extraction

By applying disciplined engineering practices at the extraction layer, teams can prevent errors from cascading and enable analytics that leadership can actually act on.

Follow these data extraction best practices to streamline the process and ensure data quality:

- Define precise objectives: Anchor your pipeline design to the metrics and models that matter—whether attribution, ROI, churn, or lifecycle KPIs. Clarify the analytical outputs before choosing sources to avoid over-collecting low-value data.

- Select scalable, fault-tolerant tooling: Prioritize platforms with broad connector libraries, automated scheduling, schema handling, and robust error recovery. Enterprise-grade pipelines should be able to self-heal from API failures or schema drifts without requiring manual intervention.

- Adapt extraction strategies to the context: Full loads are optimal for initial onboarding or baseline snapshots, while incremental loads maximize efficiency and minimize strain on APIs and warehouses for ongoing syncs. Hybrid models often prove to be the best in production environments.

- Standardize with reusable templates: Codify repeatable patterns, such as campaign pipelines across multiple accounts, using templates or parameterized scripts. This accelerates onboarding of new channels and enforces consistency across the stack.

- Validate and monitor data quality continuously: Automated checks for freshness, completeness, deduplication, and anomalies are essential. Build observability into the pipeline with metrics and alerts, so issues are detected before they pollute downstream analytics.

- Apply governance at the extraction layer: Use enforced naming conventions, metadata tagging, and campaign rules to prevent inconsistencies from cascading. Early governance reduces reconciliation effort and ensures reliability at scale.

- Integrate natively with downstream systems: Streamline delivery into data warehouses, BI platforms, and ML pipelines with minimal transformations at the extraction layer. Smooth integration reduces latency between collection and insight.

- Engineer for scale and complexity: Plan for exponential growth in source variety, account structures, and data granularity. Architect pipelines with modularity and elasticity so they scale seamlessly with organizational needs.

Data Extraction and ETL

Data extraction rarely operates in isolation. It’s the entry point to a broader pipeline. Understanding its role within ETL (Extract, Transform, Load) is critical for building analytics systems that are both reliable and elastic under enterprise workloads.

ETL Defined

ETL provides a disciplined three-stage framework for turning fragmented raw inputs into consistent, analysis-ready outputs.

- Extract: Data is pulled from heterogeneous sources, including databases, SaaS APIs, log files, spreadsheets, or unstructured assets like PDFs and HTML. The challenge is handling schema drift, rate limits, and credential rotation while maintaining stable connectors.

- Transform: Once staged, data undergoes cleansing, deduplication, normalization, and enrichment. Business logic is applied here, such as unifying campaign IDs, aligning time zones, or modeling attribution windows. This step dictates downstream trust in KPIs.

- Load: The processed data is delivered into a centralized environment, commonly a data warehouse or lakehouse, where BI, AI, and predictive modeling workloads consume it without additional reconciliation.

Why This Matters in Practice

At scale, marketing and sales data come from dozens of systems with conflicting structures. Without ETL, analytics leaders face brittle dashboards, untrustworthy KPIs, and wasted time reconciling data.

Proper ETL consolidates these feeds under uniform rules, introduces validation gates, and enforces governance at ingestion.

The result: repeatable, auditable pipelines that support advanced use cases such as attribution modeling, forecasting, or customer lifetime value analysis.

The emergence of ELT

Cloud-native architectures have shifted toward ELT (Extract, Load, Transform), where raw data lands first in the warehouse and is transformed afterward with its compute power.

ELT is particularly valuable when processing high-volume granular data—like impression logs or clickstream events, where storage is cheap but transformation needs to be flexible and iterative.

Many modern stacks combine both ETL and ELT, using ETL for critical governed datasets and ELT for exploratory or machine learning workloads.

Emerging Trends in Marketing Data Extraction

The data extraction landscape is shifting faster than ever, driven by advancements in AI and real-time infrastructure. From self-healing scrapers that adapt on the fly, to tools that extract text, images, video, and audio, and AI agents that require seamless, high-quality data pipelines.

These emerging capabilities are turning extraction into a strategic difference-maker rather than a technical chore. Let’s break down the trends reshaping how organizations capture, process, and leverage data.

1. Real-time and streaming data pipelines

Batch updates are no longer enough. Real-time pipelines stream events with millisecond latency, enabling instant triggers such as cart abandonment retargeting. Event-driven architectures (APIs, webhooks) are powering this shift, combining real-time responsiveness with warehouse-based aggregation.

2. Privacy-aware data handling

With GDPR, CCPA/CPRA, and the death of third-party cookies, pipelines must enforce privacy by design. Techniques such as hashing, anonymization, and consent handling are now integrated into extraction workflows. First-party data has become the strategic core for identity and compliance.

3. Intelligent, self-adaptive pipelines

AI-powered ETL tools now detect schema drift, automatically adjust mappings, and flag anomalies in near real-time. This prevents broken dashboards, reduces manual fixes, and turns pipelines into self-healing systems that preserve data integrity for analytics and modeling.

4. No-code / Low-code ETL

Drag-and-drop ETL platforms empower marketing and analytics teams to build and manage pipelines without heavy engineering support. This reduces dependency on scarce technical talent, speeds up integration by up to 80%, and enforces consistency with prebuilt connectors.

5. Cross-platform identity resolution

As cookies vanish, identity stitching across devices and channels is becoming mainstream. Pipelines now integrate identity resolution, utilizing first-party identifiers and AI matching—to unify fragmented profiles into a single, unified customer view for personalization and ROI measurement.

6. Unified data layers and reverse ETL

Centralized warehouses/lakes act as the single source of truth. Reverse ETL pushes curated data back into activation systems (ads, CRM, email). This creates a closed loop where insights don't just sit in dashboards; they directly fuel campaigns and customer engagement.

How Improvado Streamlines Data Extraction

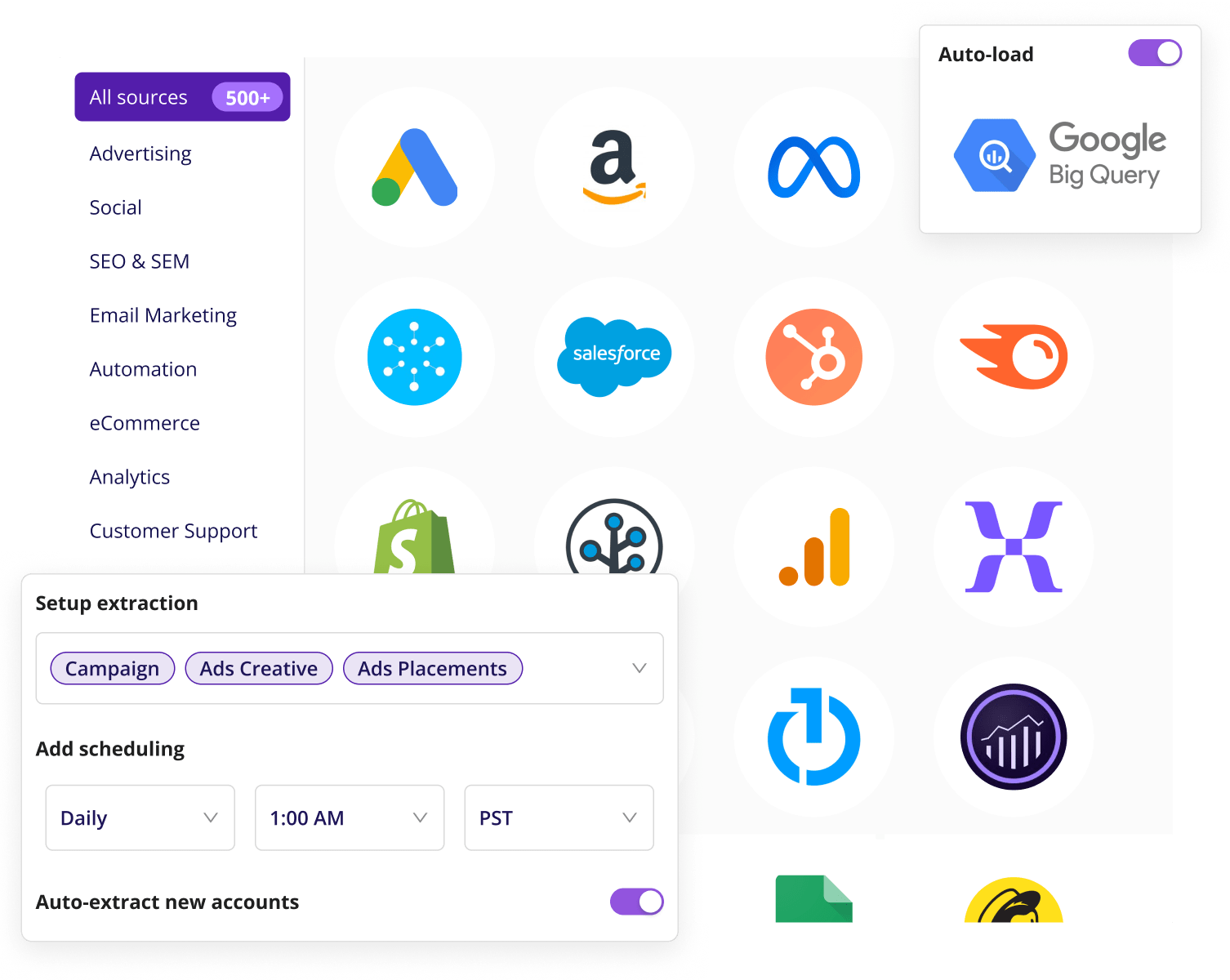

Improvado is built for teams that need high-volume, analysis-ready marketing data without the need for engineering overhead.

It connects to 500+ sources, including ad platforms, CRMs, spreadsheets, and flat files, while abstracting away the complexity of APIs.

The platform extracts 46,000+ metrics and dimensions across unlimited accounts, preserving full granularity for downstream modeling and attribution. Data specialists can rely on normalized, schema-consistent datasets that eliminate manual wrangling and reduce time-to-insight.

Pipeline resilience is designed in: automated error handling, flexible sync schedules (including hourly refreshes), and reusable templates that replicate workflows across accounts without reconfiguration. This enables seamless scaling of integrations across regions, campaigns, or business units.

Improvado also aligns with enterprise governance standards. Features like campaign rule enforcement, anomaly detection, and data freshness monitoring are paired with SOC 2, GDPR, HIPAA, and CCPA compliance, ensuring datasets are both trustworthy and compliant.

Once data is loaded, analysts can push it directly to warehouses, BI tools, or AI workflows, with governance and auditability intact.

.png)

.png)