Successfully leveraging the ocean of marketing data requires more than just access to it. Data scattered across ad platforms, CRMs, analytics tools, and warehouses creates silos that obscure insights and slow decision-making. Data integration bridges these gaps, connecting, transforming, and unifying data from every source into a single, reliable foundation for analysis.

This article breaks down what data integration means for modern marketing organizations: how it works, the key technologies behind it, and why it’s critical for achieving cross-channel visibility, accurate reporting, and scalable growth.

Key Takeaways

- Unified View: Data integration combines data from various sources to create a single source of truth, eliminating inconsistencies and data silos.

- Core Process: The process typically involves extracting data from source systems, transforming it into a consistent format, and loading it into a target system like a data warehouse.

- Key Methods: Common integration methods include ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), API integration, and real-time data streaming.

- Business Benefits: Effective data integration enhances decision-making, improves data quality, increases operational efficiency, and supports advanced analytics and AI.

- Common Challenges: Organizations often face challenges with managing diverse data formats, ensuring data quality, integrating legacy systems, and maintaining security.

- Role of Tools: Data integration platforms automate the complex process of connecting different systems, saving time and resources while ensuring data accuracy and scalability.

What Is Data Integration?

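Data integration is the process of connecting, transforming, and unifying data from multiple sources into a single, reliable foundation for analysis. In practice, this means taking information from separate applications, databases, and files, each with its own structure and format, and making it work together.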

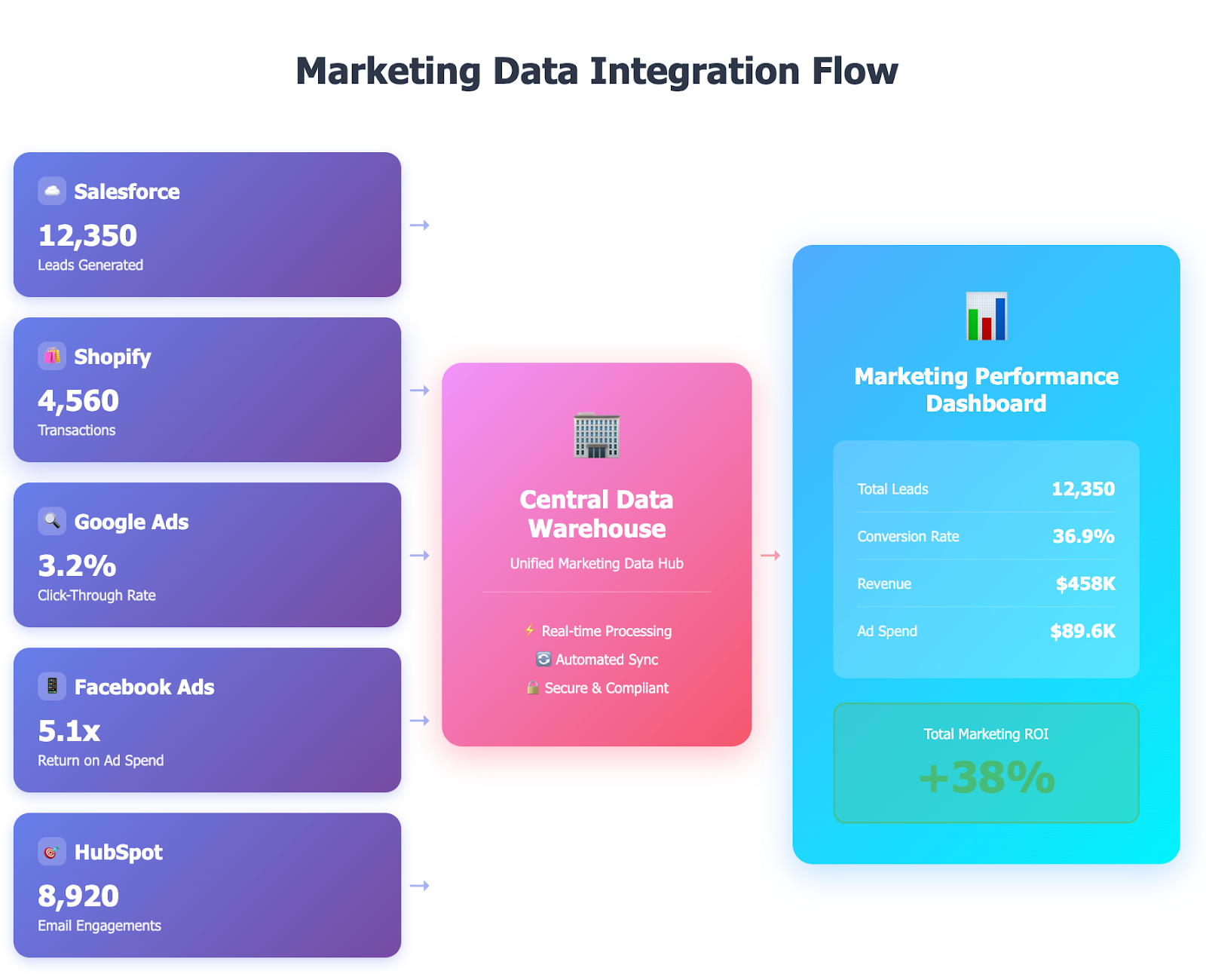

For an enterprise, this could involve merging customer data from a Salesforce CRM, transaction data from an e-commerce platform like Shopify, and campaign data from Google Ads and Facebook Ads. The result is a complete picture that no single source system could provide on its own. By breaking down data silos, integration empowers organizations to make more informed, data-driven decisions.

How Does Data Integration Work?

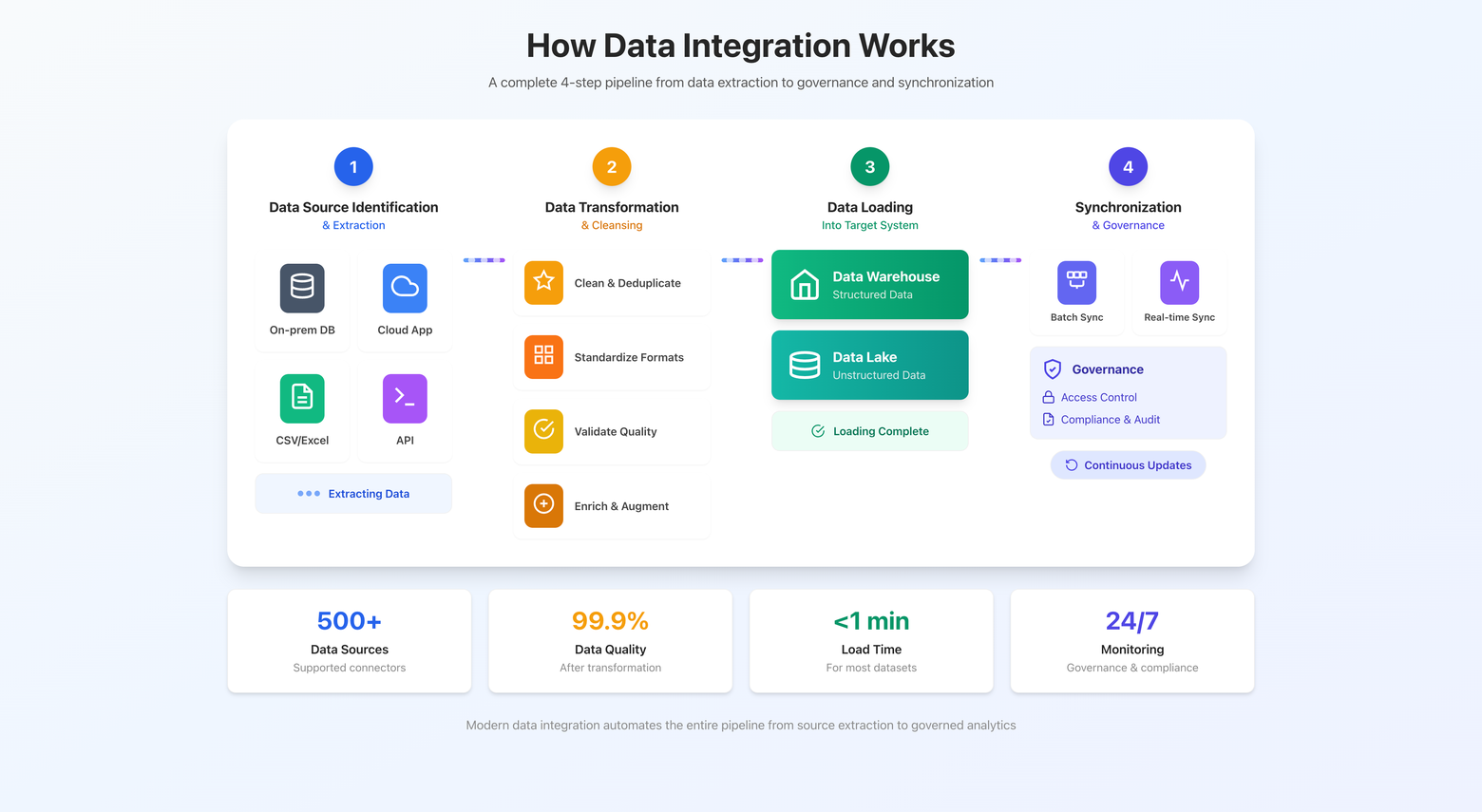

The data integration process can be broken down into four fundamental steps, forming a data pipeline that moves information from its origin to a destination where it can be analyzed.

Step 1: Data Source Identification and Extraction

The first step is to identify all the relevant data sources, which can range from on-premises databases and cloud-based applications to flat files and third-party APIs.

Once the sources are identified, data ingestion begins: the raw data is extracted from each source. The extraction method varies by source system, from simple file exports to complex API calls.
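As a rough illustration of the simplest case, a flat-file export, the sketch below reads a CSV with Python's standard `csv` module. The sample contents, the column names, and the `extract_rows` helper are hypothetical; an API-based source would need its own client code.

```python
import csv
import io

# Hypothetical flat-file export from an ad platform (columns are assumptions).
SAMPLE_EXPORT = """campaign,date,spend
Brand Search,10/16/2026,120.50
Retargeting,10/16/2026,80.00
"""

def extract_rows(file_obj):
    """Read every row of a CSV export into a list of dicts, left untouched."""
    return list(csv.DictReader(file_obj))

# io.StringIO stands in for an open file handle.
raw_rows = extract_rows(io.StringIO(SAMPLE_EXPORT))
```

Note that every value is still a string at this point; typing and cleanup belong to the transformation step.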

Step 2: Data Transformation and Cleansing

Raw data from different sources is rarely consistent. It often contains errors, duplicates, and formatting inconsistencies. The transformation stage is where this data is cleaned, standardized, and enriched to ensure high data quality.

Common transformation processes include:

- Cleansing: Correcting inaccuracies and removing duplicate records.

- Standardizing: Converting data into a consistent format (e.g., unifying date formats like "10/16/2026" and "Oct 16, 2026").

- Validating: Ensuring data adheres to business rules and constraints.

- Enriching: Augmenting the data with information from other sources to add context.
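The first three of these steps might look like the following minimal sketch. It assumes each record has `campaign`, `date`, and `spend` fields and that a duplicate is a repeated campaign and date pair; the list of accepted date layouts and the validation rules are illustrative, not a standard.

```python
from datetime import datetime

# Date layouts assumed to appear in the sources (illustrative list).
DATE_FORMATS = ("%m/%d/%Y", "%b %d, %Y", "%Y-%m-%d")

def standardize_date(value):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def transform(rows):
    """Standardize dates, validate spend, and drop duplicate records."""
    seen = set()
    clean = []
    for row in rows:
        date = standardize_date(row["date"])
        try:
            spend = float(row["spend"])
        except ValueError:
            continue  # validating: spend must be numeric
        if date is None or spend < 0:
            continue  # validating: reject bad dates and negative spend
        key = (row["campaign"].strip().lower(), date)
        if key in seen:
            continue  # cleansing: skip duplicate records
        seen.add(key)
        clean.append({"campaign": row["campaign"].strip(), "date": date, "spend": spend})
    return clean

rows = [
    {"campaign": "Brand Search", "date": "10/16/2026", "spend": "120.50"},
    {"campaign": "brand search ", "date": "Oct 16, 2026", "spend": "120.50"},
    {"campaign": "Retargeting", "date": "2026-10-16", "spend": "-5"},
]
clean_rows = transform(rows)
```

Here the second row is dropped as a duplicate once its date is standardized, and the third fails validation. Enrichment is left out because it depends on which secondary sources are available.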

Step 3: Data Loading into a Target System

After transformation, the processed data is loaded into a central target system. This destination is typically a data warehouse (for structured, analysis-ready data) or a data lake (for storing vast amounts of raw data in its native format, whether structured, semi-structured, or unstructured).

This consolidated repository serves as the single source of truth for the entire organization, enabling consistent reporting and analysis.
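A loading step can be sketched with Python's built-in `sqlite3` module, using an in-memory SQLite database as a stand-in for a real warehouse. The `campaign_spend` table and its columns are assumptions made for the example.

```python
import sqlite3

clean_rows = [
    ("Brand Search", "2026-10-16", 120.5),
    ("Retargeting", "2026-10-16", 80.0),
]

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
conn.execute(
    """CREATE TABLE campaign_spend (
           campaign TEXT NOT NULL,
           date     TEXT NOT NULL,
           spend    REAL NOT NULL,
           PRIMARY KEY (campaign, date)
       )"""
)

def load(connection, rows):
    """Insert rows, replacing any existing row with the same primary key."""
    with connection:  # commits on success, rolls back on error
        connection.executemany(
            "INSERT OR REPLACE INTO campaign_spend (campaign, date, spend) "
            "VALUES (?, ?, ?)",
            rows,
        )

load(conn, clean_rows)
load(conn, clean_rows)  # re-running the load does not create duplicates
total = conn.execute("SELECT COUNT(*), SUM(spend) FROM campaign_spend").fetchone()
```

Keying the table on campaign and date makes the load safe to repeat, which matters when a failed pipeline run has to be retried.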

Step 4: Data Synchronization and Governance

Data integration is not a one-time event. Source systems are constantly updated, so a robust integration strategy includes mechanisms for ongoing synchronization. This can be done in batches (e.g., daily updates) or in real-time.
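One common way to implement batch synchronization is a high-water mark: each run copies only the rows changed since the previous run. The sketch below assumes every source row carries an `id` and an ISO 8601 `updated_at` timestamp (both hypothetical field names), so timestamps can be compared as strings.

```python
def incremental_sync(source_rows, target, watermark):
    """Copy rows changed after `watermark`; return (new_watermark, rows_copied)."""
    new_watermark = watermark
    copied = 0
    for row in source_rows:
        if row["updated_at"] > watermark:
            target[row["id"]] = row
            new_watermark = max(new_watermark, row["updated_at"])
            copied += 1
    return new_watermark, copied

source = [
    {"id": 1, "status": "lead", "updated_at": "2026-10-15T09:00:00"},
    {"id": 2, "status": "customer", "updated_at": "2026-10-16T12:30:00"},
]
target = {}

# First run: an empty watermark means everything is copied.
watermark, first_run = incremental_sync(source, target, "")

# The source changes, then the next batch picks up only the changed row.
source[0] = {"id": 1, "status": "customer", "updated_at": "2026-10-17T08:00:00"}
watermark, second_run = incremental_sync(source, target, watermark)
```

This approach does not notice rows deleted in the source, so it is usually paired with periodic full reloads or with change data capture.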

Additionally, data governance policies are applied to manage data access, security, and compliance, ensuring the integrated data remains reliable and secure over time.

The Benefits of Data Integration for Your Business

Implementing a comprehensive data integration strategy provides significant competitive advantages and operational improvements.

Creates a Single Source of Truth

By consolidating data from multiple sources, integration establishes a single, authoritative view of business information. This eliminates discrepancies between departments and ensures everyone is working from the same up-to-date dataset, leading to more aligned strategies and decisions.

Enhances Decision-Making and Business Intelligence

With access to a unified and accurate dataset, leaders can gain a holistic view of business performance. This enables more sophisticated business intelligence (BI), allowing analysts to identify trends, spot opportunities, and make strategic decisions with confidence.

Increases Operational Efficiency

Automating the flow of data between different systems eliminates the need for manual data entry and reconciliation, freeing up employees to focus on high-value tasks. This streamlines workflows, reduces human error, and accelerates business processes across the organization.

Improves Data Quality and Consistency

The transformation and cleansing steps inherent in data integration significantly enhance data quality. By standardizing formats, removing duplicates, and validating information, businesses can trust the accuracy and reliability of their data, which is crucial for building accurate reports and predictive models.

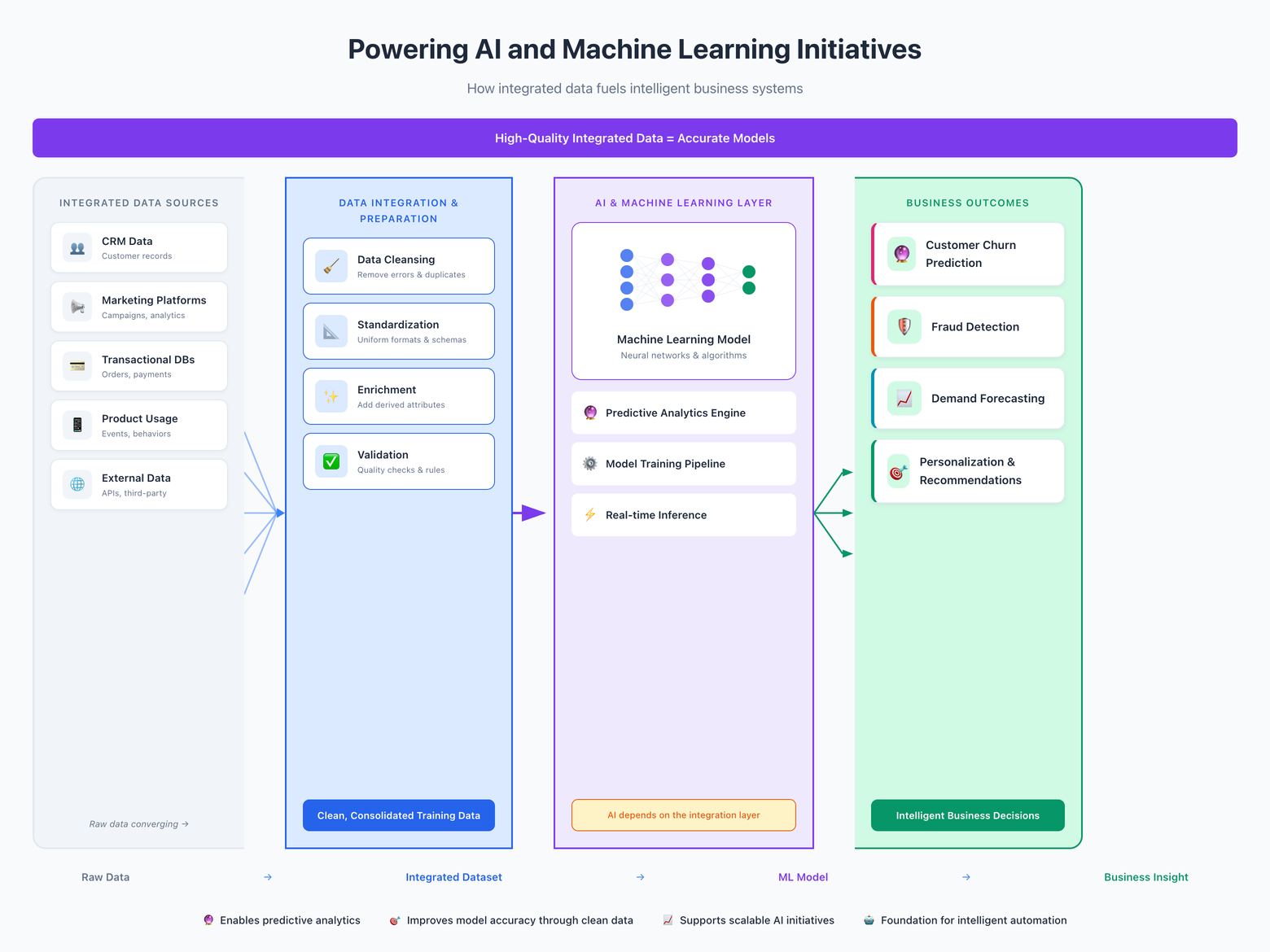

Supports Advanced Analytics, AI, and Machine Learning

Large-scale, integrated datasets are the fuel for modern analytics. A well-structured data foundation is essential for training accurate machine learning models, powering AI-driven applications, and performing complex predictive analytics that can uncover hidden patterns and forecast future outcomes.

Common Data Integration Methods and Approaches

Modern marketing and analytics teams rely on multiple systems – ad platforms, CRMs, BI tools, and warehouses. To unify insights, they use data integration methods that move, synchronize, or virtually connect data across environments.

Each approach has its strengths depending on scale, latency needs, and data governance requirements.

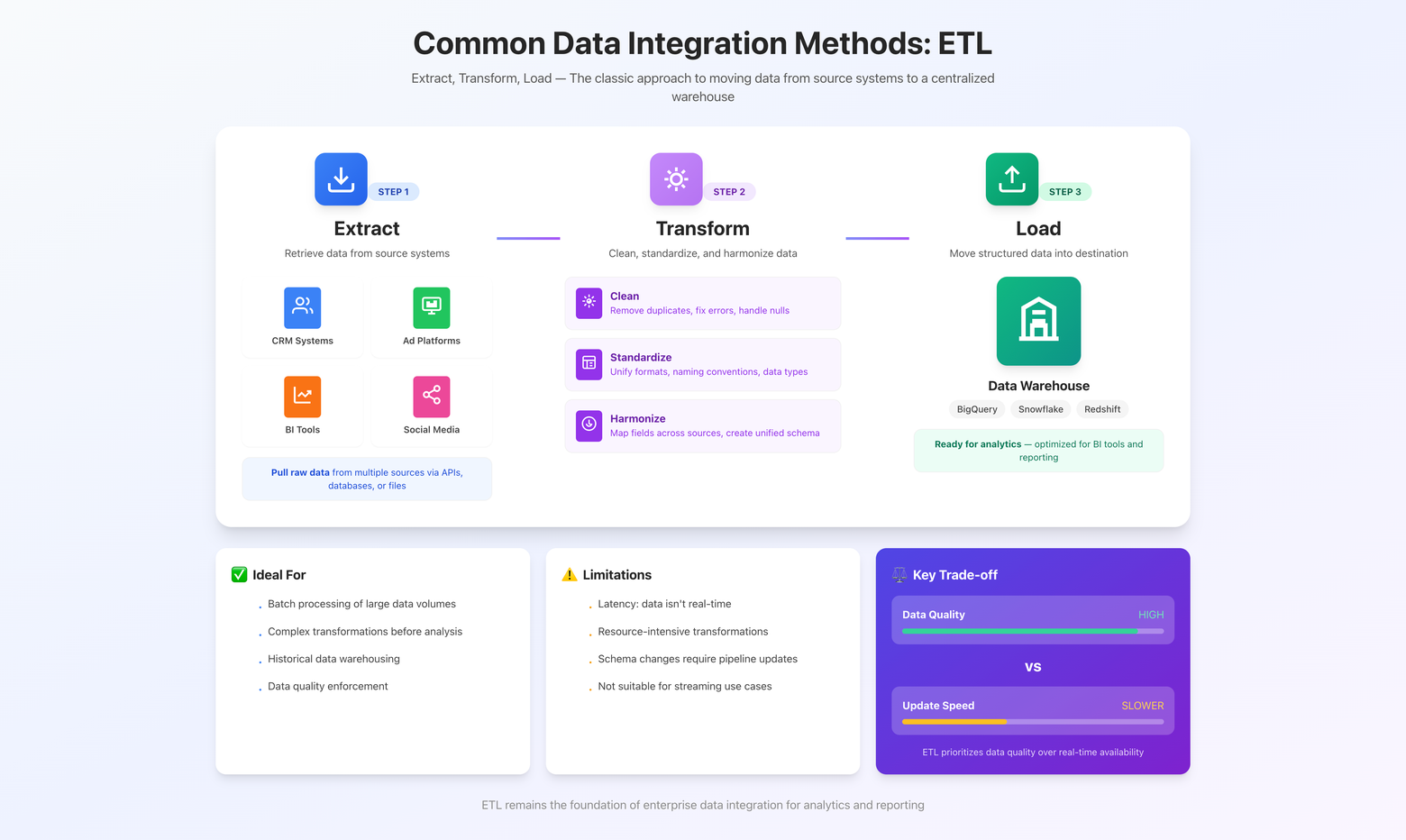

1. ETL (Extract, Transform, Load)

ETL is the classic approach to data integration. Data is:

- Extracted from source systems

- Transformed on a processing server

- Loaded into a data warehouse

This method enforces data quality and structure before the data is loaded, making it ideal for:

- Complex transformations and schema harmonization

- Regulated industries requiring data validation before storage

- On-premises or hybrid data environments

However, ETL can be slower for massive or continuously updated datasets, since transformations happen before loading.

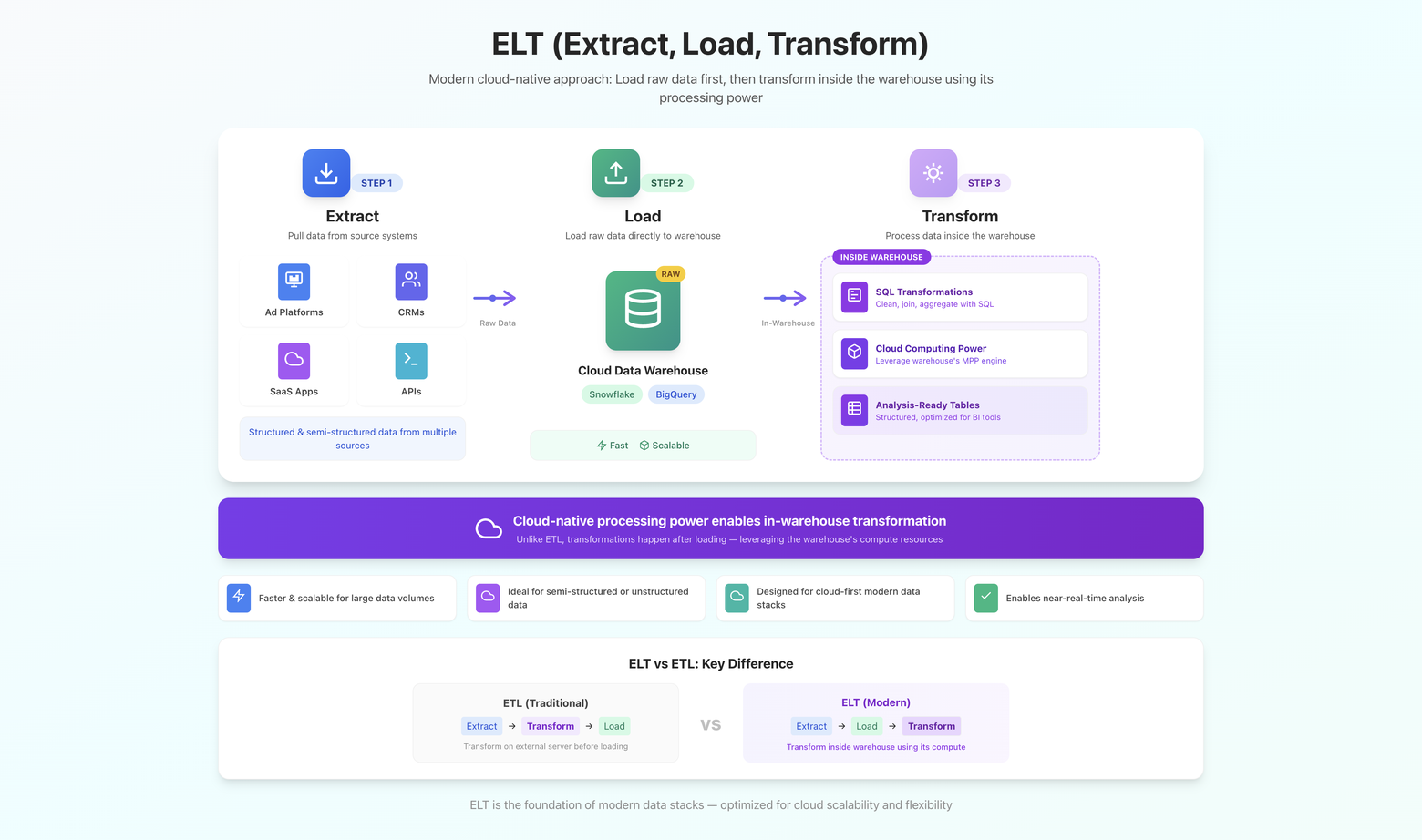

2. ELT (Extract, Load, Transform)

ELT reverses the last two steps of ETL, leveraging cloud-native warehouse processing power. Data is:

- Extracted from sources

- Loaded directly into the target system (e.g., Snowflake, BigQuery)

- Transformed within that environment

This approach is fast, scalable, and cost-efficient for:

- Large volumes of semi-structured or unstructured data

- Cloud-first organizations using SQL-based transformations

- Near-real-time analysis in modern data stacks
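The ELT ordering can be illustrated with a small sketch in which an in-memory SQLite database stands in for a cloud warehouse such as Snowflake or BigQuery. Raw records are loaded as-is, and the transformation runs afterwards as SQL inside the target. The table and column names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Load: raw records land untouched, with every value stored as text.
conn.execute("CREATE TABLE raw_ads (campaign TEXT, day TEXT, spend TEXT)")
conn.executemany(
    "INSERT INTO raw_ads VALUES (?, ?, ?)",
    [
        ("Brand Search", "2026-10-16", "120.50"),
        ("Brand Search", "2026-10-16", "120.50"),  # duplicate from a repeated export
        ("Retargeting", "2026-10-16", "80.00"),
    ],
)

# Transform: SQL inside the target system deduplicates and casts types.
conn.execute(
    """CREATE TABLE ads_clean AS
       SELECT DISTINCT campaign, day, CAST(spend AS REAL) AS spend
       FROM raw_ads"""
)
result = conn.execute(
    "SELECT campaign, spend FROM ads_clean ORDER BY campaign"
).fetchall()
```

Because the raw table is kept, the transformation can be rewritten and re-run later without extracting the data again.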

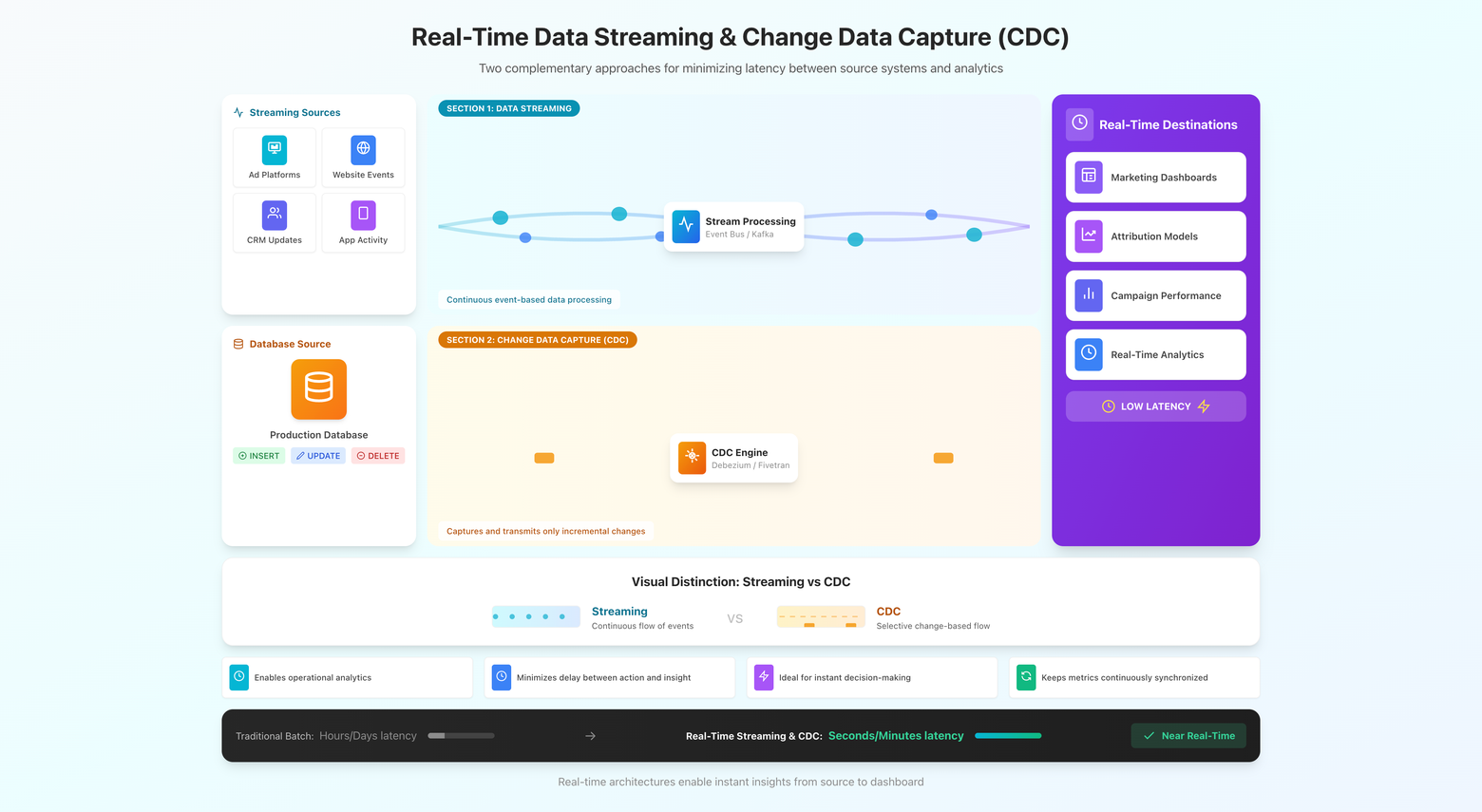

3. Real-Time Data Streaming & Change Data Capture (CDC)

For operational analytics or instant decision-making, real-time integration is essential.

Two main techniques dominate:

- Data streaming – continuously processes and transmits data as events occur.

- Change Data Capture (CDC) – identifies changes in a source database and sends only those updates downstream.

These methods keep marketing dashboards, attribution models, and campaign performance metrics synchronized in real time, minimizing latency between action and insight.
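The idea behind CDC, sending only what changed, can be sketched by comparing two snapshots of a table keyed by primary key. This is a simplification: production CDC tools typically read the database's transaction log rather than diffing snapshots, and the function names here are hypothetical.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return change events."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append(("insert", key, row))
        elif previous[key] != row:
            events.append(("update", key, row))
    for key in previous:
        if key not in current:
            events.append(("delete", key, None))
    return events

def apply_changes(target, events):
    """Replay change events against a downstream copy."""
    for op, key, row in events:
        if op == "delete":
            target.pop(key, None)
        else:
            target[key] = row

before = {1: {"email": "a@example.com"}, 2: {"email": "b@example.com"}}
after = {
    1: {"email": "a@example.com"},
    2: {"email": "b@new.example.com"},
    3: {"email": "c@example.com"},
}

replica = dict(before)  # downstream copy, currently in sync with `before`
events = capture_changes(before, after)
apply_changes(replica, events)
```

Only two events travel downstream here (one update, one insert), even though the table has three rows.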

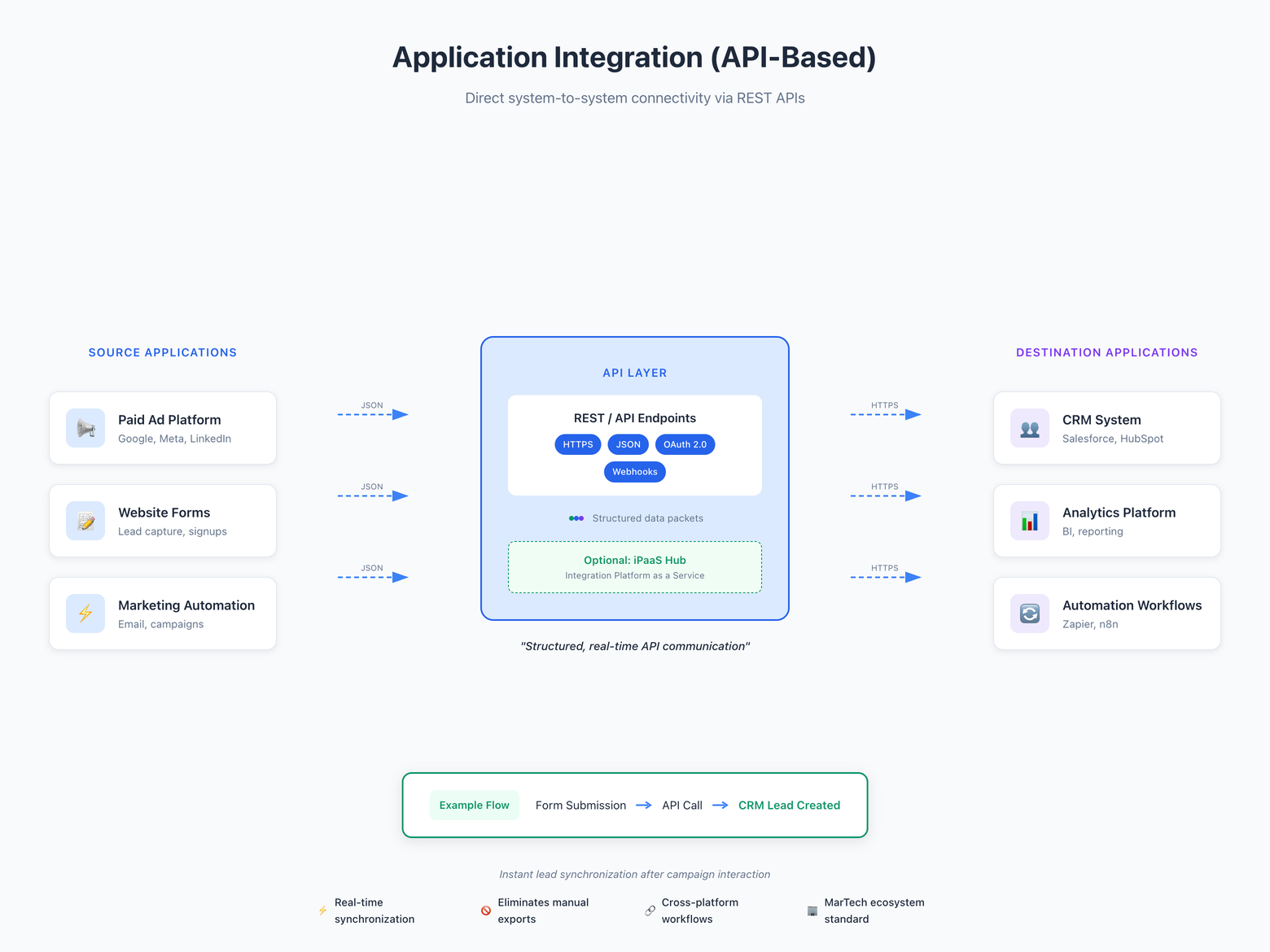

4. Application Integration (API-Based)

API integrations connect applications directly – allowing instant, structured data exchange.

Common in marketing tech, this method supports:

- Real-time sync between CRMs, ad platforms, and automation tools

- Cross-platform workflows without manual exports

- Integration via iPaaS (Integration Platform as a Service) solutions

For example, an API integration might update CRM leads instantly after a form submission in a paid campaign platform.
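A sketch of that form-to-CRM flow, using only Python's standard library, is shown below. The endpoint URL, the payload fields, and the bearer-token scheme are all hypothetical; a real CRM defines its own API contract. The request is built but deliberately not sent.

```python
import json
import urllib.request

CRM_LEADS_URL = "https://crm.example.com/api/leads"  # hypothetical endpoint

def build_lead_request(form_submission, api_token):
    """Map a form submission to a JSON POST request for the CRM."""
    payload = {
        "email": form_submission["email"].strip().lower(),
        "source": form_submission.get("utm_source", "unknown"),
        "campaign": form_submission.get("utm_campaign"),
    }
    return urllib.request.Request(
        CRM_LEADS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )

request = build_lead_request(
    {"email": " Jane@Example.com ", "utm_source": "paid_social"},
    api_token="TOKEN",
)
# Sending it would be: urllib.request.urlopen(request, timeout=10)
```

In practice an iPaaS or a vendor connector usually handles this mapping, along with retries and authentication.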

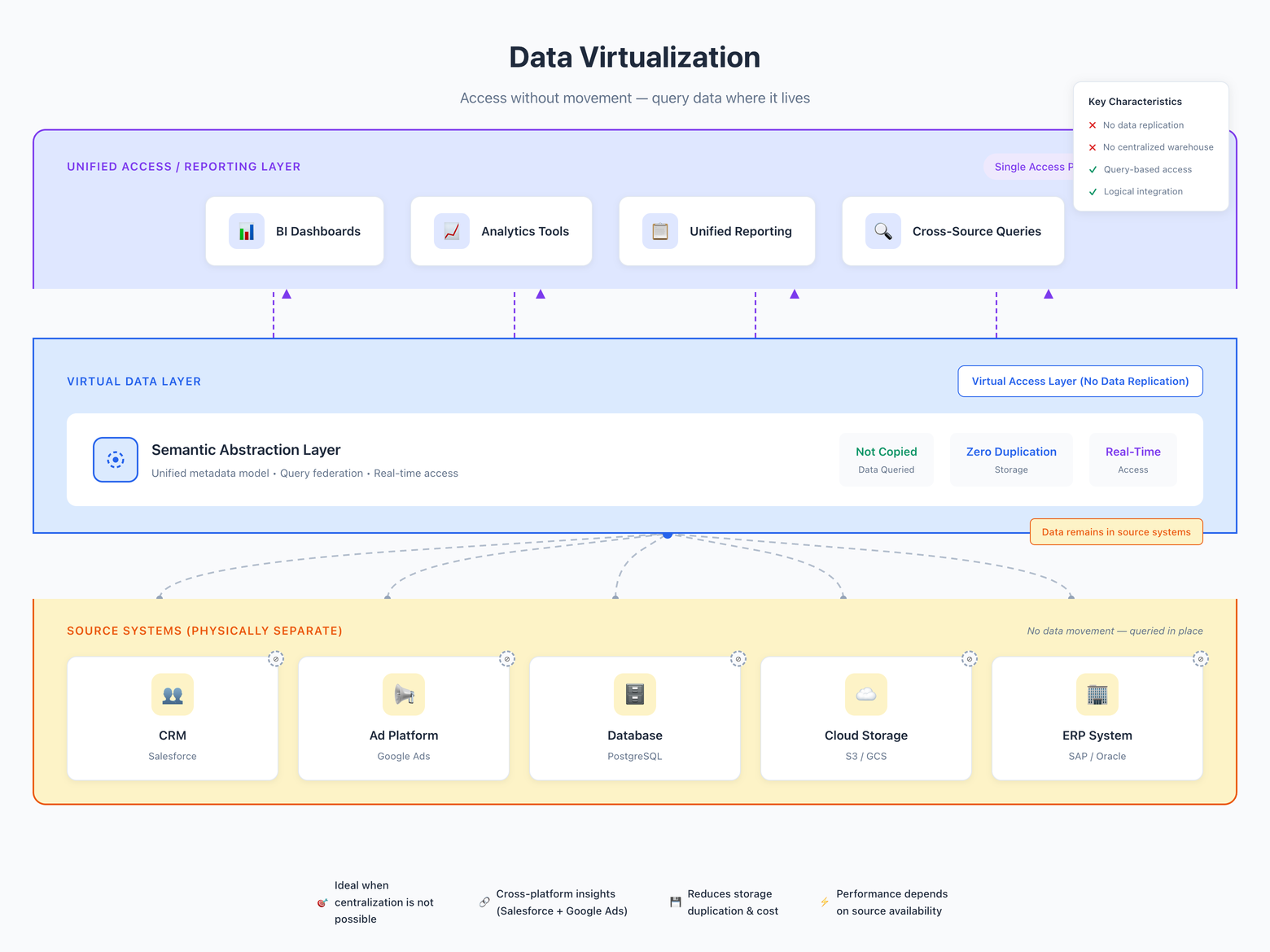

5. Data Virtualization


Data virtualization provides access to multiple data sources through a single virtual layer – without copying or physically moving data.

It’s ideal for:

- Teams that need unified reporting but can’t centralize data

- Quick insights across disparate sources (e.g., Salesforce + Google Ads)

- Environments with strict storage or compliance constraints

While it reduces replication and cost, performance depends heavily on the underlying data systems.
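The principle can be sketched as a thin view that queries its sources at read time and stores nothing itself. The `VirtualView` class and the two callables standing in for a CRM and an ad platform are hypothetical; real virtualization layers add query planning, pushdown, and caching.

```python
class VirtualView:
    """Join two live sources on demand; nothing is copied or stored here."""

    def __init__(self, fetch_crm, fetch_ads):
        self._fetch_crm = fetch_crm
        self._fetch_ads = fetch_ads

    def spend_per_account(self):
        # Each call reads both sources again, so results always reflect
        # the current state of the underlying systems.
        spend = {}
        for row in self._fetch_ads():
            spend[row["account"]] = spend.get(row["account"], 0.0) + row["spend"]
        return [
            {
                "account": acct["name"],
                "owner": acct["owner"],
                "spend": spend.get(acct["name"], 0.0),
            }
            for acct in self._fetch_crm()
        ]

crm_accounts = [{"name": "Acme", "owner": "Dana"}]
ad_spend = [{"account": "Acme", "spend": 100.0}]
view = VirtualView(lambda: crm_accounts, lambda: ad_spend)

first = view.spend_per_account()
ad_spend.append({"account": "Acme", "spend": 50.0})  # the source changes
second = view.spend_per_account()                    # the view sees it immediately
```

Every query pays the cost of reading the sources, which is why performance tracks the slowest underlying system.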

Key Data Integration Use Cases

Data integration is the backbone of many critical business functions and strategic initiatives.

Data Warehousing and Data Lake Development

The most fundamental use case is populating a central data warehouse or data lake. By integrating data from all corners of the business, companies create a centralized repository for historical and current data, which becomes the foundation for all analytics and reporting activities.

Powering AI and Machine Learning Initiatives

AI and machine learning models require vast amounts of high-quality, integrated data for training. Data integration provides the clean, consolidated datasets needed to build predictive models for everything from customer churn prediction to fraud detection and demand forecasting.

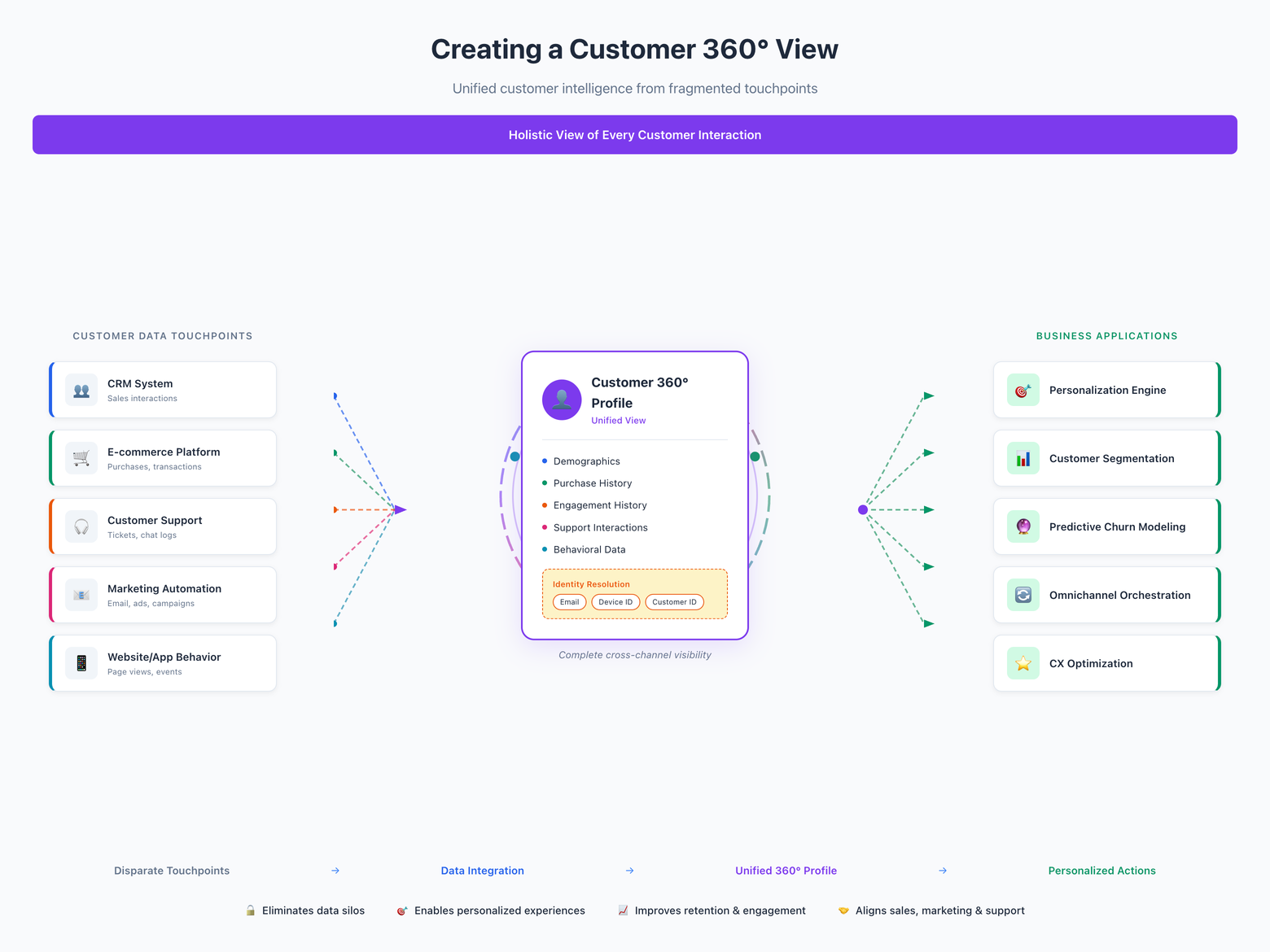

Creating a Customer 360° View

To deliver exceptional customer experiences, businesses need a complete understanding of their customers. Data integration brings together data from CRM, e-commerce, customer support, and marketing platforms to create a holistic "Customer 360" profile, tracking every interaction and touchpoint.
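A minimal sketch of profile building is shown below, assuming that every system identifies customers by email address and that a case-insensitive match is good enough. Real identity resolution is usually harder, and the field names here are hypothetical.

```python
from collections import defaultdict

def build_profiles(crm, orders, tickets):
    """Merge records from three systems into one profile per email address."""
    profiles = defaultdict(
        lambda: {"name": None, "order_total": 0.0, "open_tickets": 0}
    )
    for contact in crm:
        profiles[contact["email"].lower()]["name"] = contact["name"]
    for order in orders:
        profiles[order["email"].lower()]["order_total"] += order["amount"]
    for ticket in tickets:
        if ticket["status"] == "open":
            profiles[ticket["email"].lower()]["open_tickets"] += 1
    return dict(profiles)

crm = [{"email": "Jane@Example.com", "name": "Jane Doe"}]
orders = [
    {"email": "jane@example.com", "amount": 40.0},
    {"email": "jane@example.com", "amount": 60.0},
]
tickets = [
    {"email": "JANE@example.com", "status": "open"},
    {"email": "jane@example.com", "status": "closed"},
]
profiles = build_profiles(crm, orders, tickets)
```

Normalizing the join key before merging is what keeps one customer from appearing as three separate people.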

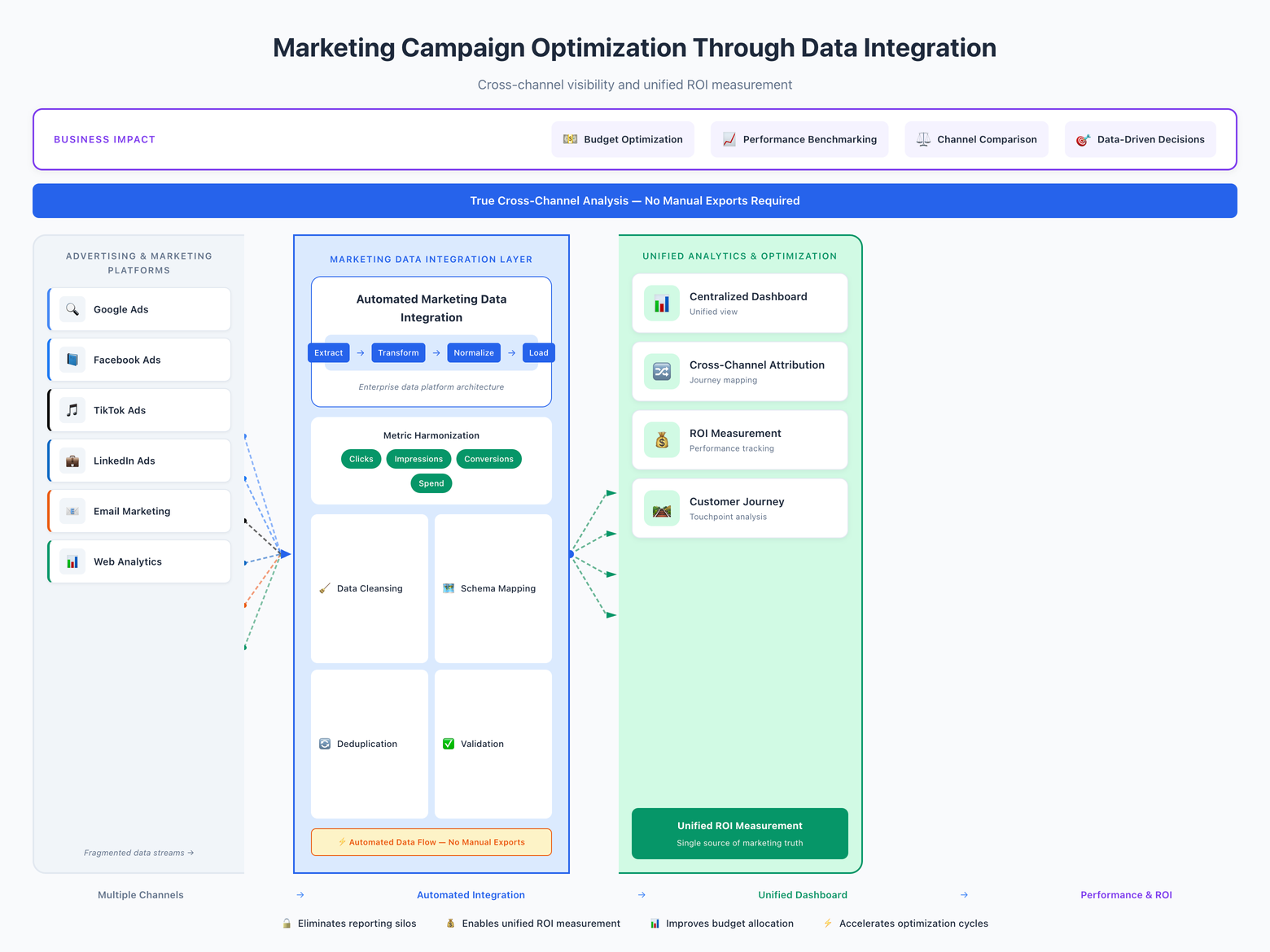

Marketing Campaign Optimization

Marketers use dozens of tools, from advertising platforms to analytics software. Integrating this data provides a unified view of campaign performance and customer journeys across different channels.

For example, an enterprise brand can use a platform like Improvado to automatically pull performance data from every advertising channel (such as Google Ads, Facebook Ads, and TikTok) into a single dashboard, enabling true cross-channel analysis and ROI measurement.
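Once channel data sits in one place, a cross-channel ROI comparison reduces to a simple aggregation. The sketch below is independent of any particular platform and assumes each row already carries `channel`, `spend`, and attributed `revenue`; ROI is computed as (revenue - spend) / spend.

```python
def channel_roi(rows):
    """Aggregate spend and revenue per channel and return ROI per channel."""
    totals = {}
    for row in rows:
        t = totals.setdefault(row["channel"], {"spend": 0.0, "revenue": 0.0})
        t["spend"] += row["spend"]
        t["revenue"] += row["revenue"]
    return {
        channel: (t["revenue"] - t["spend"]) / t["spend"]
        for channel, t in totals.items()
        if t["spend"] > 0  # avoid dividing by zero for unpaid channels
    }

rows = [
    {"channel": "Google Ads", "spend": 100.0, "revenue": 300.0},
    {"channel": "Google Ads", "spend": 100.0, "revenue": 200.0},
    {"channel": "Facebook Ads", "spend": 50.0, "revenue": 40.0},
]
roi = channel_roi(rows)
```

The calculation is trivial; the hard part is getting spend and revenue from different systems into rows that share the same channel names, currencies, and date ranges.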

Enterprise Resource Planning (ERP)

ERP systems are central hubs for managing core business processes like finance, HR, and supply chain. Data integration connects the ERP to other systems (like CRM or e-commerce platforms), ensuring seamless data flow and process automation across the entire organization.

Common Challenges of Data Integration

While powerful, data integration comes with its own set of challenges that organizations must navigate.

Managing Diverse Data Sources and Formats

Enterprises often pull data from hundreds of sources, including legacy on-premises databases, modern cloud applications, and unstructured files. Each source has a unique data model and format, making consolidation complex and time-consuming.

Ensuring Data Quality and Integrity

Garbage in, garbage out.

If the source data is inaccurate, incomplete, or inconsistent, the integrated data will be unreliable. Implementing robust data validation and cleansing rules is critical but requires significant effort.

Integrating Legacy Systems

Many established companies rely on legacy systems that lack modern APIs. Extracting data from these older, siloed systems can be technically challenging and often requires specialized connectors or custom development.

Scalability and Performance Bottlenecks

As data volumes grow, integration processes can become slow and inefficient, creating bottlenecks that delay access to critical information. Designing data pipelines that can scale to handle large-scale data ingestion and transformation is a major consideration.

Data Security and Compliance

Consolidating sensitive data into a single location increases security risks. Organizations must implement strong access controls, encryption, and governance policies to protect data and comply with regulations like GDPR and CCPA.

Conclusion

Data integration is what turns disconnected data into operational insight. When marketing, sales, and revenue data live in separate systems, analytics becomes reactive and incomplete. Integrating these sources at scale is essential for attribution modeling, performance forecasting, and ROI analysis that leadership can trust.

Improvado solves this by automating data ingestion and transformation across 500+ marketing and analytics sources, mapping metrics through its AI-powered data transformation engine, and delivering governed, warehouse-ready datasets to BigQuery, Snowflake, or Databricks.

Improvado eliminates manual ETL, enforces data consistency, and enables unified analysis of SEO, paid, and revenue metrics within any BI tool.

Connect your fragmented marketing data to business outcomes — book a demo to see Improvado in action.
