Gathering marketing data is hard work. Analyzing it manually is even harder.

That’s why AI tools built on large language models (LLMs), such as ChatGPT and Perplexity, are so appealing: they significantly speed up analysis and make insights more accessible.

But there’s a problem: these tools typically run outside your organization’s secure infrastructure.

Feeding them marketing data means handing your sensitive information over to external platforms with unclear data boundaries. You lose control over where your data goes, how it’s used, and who sees it.

This heightens the risk of regulatory violations, data leaks, and exposure of proprietary insights that could be copied or misused.

To prevent this, you need a clear AI acceptable use policy and operational checklists that define what marketing data can and cannot be shared with LLMs.

In this article, we’ll show you how to create one.

Why Does LLM Use in Marketing Need Legal Oversight?

Effective marketing depends on customer data. For instance, if you sell customized t-shirts and want to build precise audience targeting, you’d need insights like buying behavior, demographic preferences, income, and location trends.

Getting the most out of that data calls for powerful AI analytics tools like LLMs that can quickly sort through patterns and help you make smarter marketing decisions.

But this requires legal oversight to avoid the following consequences.

1. Unconsented data sharing

By default, many LLM services collect user inputs and use them to improve future versions of their models.

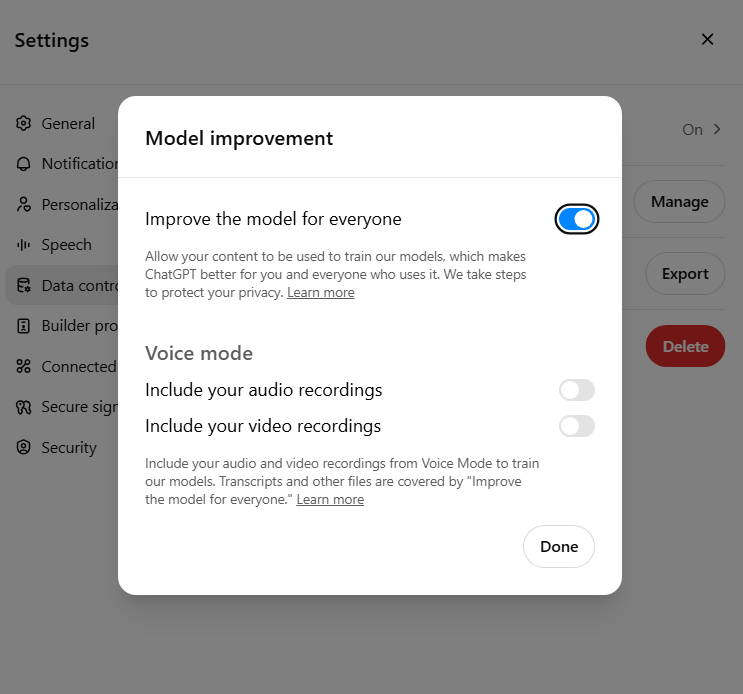

For example, unless you’ve explicitly disabled data sharing or are using the business version of ChatGPT, your inputs may be accessible to AI trainers and could be used to help improve future versions of the model.

If your customers’ data is part of these inputs, this may breach data usage laws, such as the General Data Protection Regulation (GDPR).

2. Unclear data storage and a lack of an audit trail

LLM providers often store data across multiple public cloud infrastructures because of the sheer volume of data and the complexity of managing it. This fragments your data and increases the risk of a breach that could harm your business.

Besides, you can’t independently audit or track how your sensitive marketing data is accessed, processed, or shared within these environments.

This makes it difficult to investigate incidents, assign accountability, or demonstrate regulatory compliance.

What Can and Cannot Be Input into LLMs?

While the allure of using these LLMs is strong and reasonable, you should draw a clear boundary between what marketing data actually goes in and what does not.

You can use public LLMs to analyze marketing data that does not threaten your business’s core marketing strategy. This includes:

- Publicly available information, including your business news, reports, and market trends.

- Aggregated, anonymized marketing data without personal identifiers.

- Non-confidential product descriptions or promotional content.

- Data that has been approved by legal/compliance teams.

However, you should not feed the following into any AI tool that sits outside your company’s marketing data governance:

- Personally Identifiable Information (PII) of customers or employees, such as names, emails, phone numbers, addresses.

- Confidential business information like trade secrets, pricing models, and unique marketing strategies.

- Proprietary data protected by NDAs or contracts.

- Sensitive financial or legal documents.

- Any data restricted by data privacy laws, including GDPR and HIPAA.

- Raw datasets containing personal or confidential details.

Copying and pasting any of this data into an LLM becomes legally problematic as soon as it breaches the laws or contracts that govern the data.
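As an illustration, a team could run a lightweight screen over any text before it is pasted into an external tool. The sketch below is a minimal example under stated assumptions: the pattern set, `find_pii`, and `is_safe_to_share` are hypothetical names, and the regular expressions are deliberately simple. They will miss many forms of PII (names and addresses, for example), so treat this as a first filter, not a substitute for review by your compliance team.

```python
import re

# Hypothetical, deliberately simple patterns. Real PII detection needs a
# vetted tool and legal review; these only catch obvious cases.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return each pattern name that matched, with the matching strings."""
    hits = {}
    for name, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits


def is_safe_to_share(text: str) -> bool:
    """True only when none of the patterns above match the text."""
    return not find_pii(text)
```

A prompt such as "Summarize Q3 click-through trends by region" passes this screen, while a prompt containing a customer email address is flagged under `"email"`.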

How to Build an AI Use Policy?

A legal policy for LLM use in marketing analytics is a concise playbook that guides and helps your team adhere to globally accepted data security practices. Here’s what it should contain.

Scope and purpose of LLM use in marketing analytics

Your legal policy must explain the extent and the types of consumer data you will process using LLMs.

Valuable consumer data types in marketing include transactional data, communication data, behavioural data, demographic data, and survey or feedback data.

You also need to spell out why you’re processing this data, for example for internal analytics, campaign optimization, or lead generation through high-performing content.

Data handling and consent protocols

Prior to data collection, your customers must clearly consent to the use of their data inside LLMs.

Your policy must also highlight how each piece of data collected is processed for LLM use. This includes stating if sensitive or personally identifiable information will be anonymized or pseudonymized.
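To make the pseudonymization step concrete, here is a minimal sketch that replaces identifier fields with a keyed hash (HMAC-SHA256) before a record leaves your systems. The function names, field names, and key handling are assumptions for illustration, not a prescribed method. Keep in mind that pseudonymized data can still be linked back to a person by anyone holding the key, so it is generally still treated as personal data under GDPR.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    # Normalize so "Jane@Example.com " and "jane@example.com" map to one token
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, pii_fields: set[str], secret_key: bytes) -> dict:
    """Return a copy of the record with the named fields pseudonymized."""
    return {
        key: pseudonymize(str(val), secret_key) if key in pii_fields else val
        for key, val in record.items()
    }
```

Because the same input and key always produce the same token, analysts can still count repeat customers or join tables without seeing the underlying email address. The key itself should be stored separately from the data and never shared with the LLM provider.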

Data minimization and sensitive data restrictions

Your policy should require that only the data needed for the stated purpose is collected and fed into LLMs. This prevents unnecessary and unjustified exposure of customers’ sensitive information.

Additionally, your policy should restrict the use of sensitive data, such as payment details, health information, or biometric identifiers, unless it is processed in a secure LLM environment and with explicit consent.

Accuracy and bias mitigation

While using LLMs for marketing analytics is often faster than the manual approach, it’s essential to let your team know that the results can still be prone to errors and bias. Your policy should require regular review of outputs to check fairness across demographics, avoid stereotypes, and ensure inclusive language.

Export control and restrictions

Certain types of data, such as customer information from specific countries, sensitive product details, or datasets subject to U.S. Export Administration Regulations (EAR), may be legally required to stay within specific borders.

Highlight these export-controlled or region-locked categories inside your legal policy and restrict your team from using external LLMs to process them, since these platforms may move data across servers in different locations.

Data retention and deletion policy

Indicate how long your customers’ marketing data stays in your storage and when you will delete it. The General Data Protection Regulation (GDPR) does not set fixed retention periods; it requires that personal data be kept only for as long as it is needed for the purpose it was collected for. Specific periods for some records, such as financial data, usually come from other laws like national tax or accounting rules.
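A retention schedule is easier to enforce when it is written down in a form that can be checked automatically. The following is a minimal sketch, assuming hypothetical data categories and retention periods; the actual numbers should come from your legal team.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days; set yours with legal counsel.
RETENTION_DAYS = {
    "campaign_metrics": 730,
    "survey_responses": 365,
}


def is_due_for_deletion(category: str, collected_on: date, today: date) -> bool:
    """True when a record has been held longer than its category allows."""
    limit = timedelta(days=RETENTION_DAYS[category])
    return today - collected_on > limit
```

For example, a survey response collected on 1 January 2023 would be flagged for deletion when checked on 1 June 2024, while campaign metrics from the same date would not.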

Audit, incident reporting, and breach notification workflow

State clear timelines for auditing how LLMs are being used, what data is being processed, and whether usage aligns with the established legal policy and applicable regulations.

This could be after every use, weekly, monthly, or biannually. Reports from these audits should be sent to the internal compliance officer for corrective actions where necessary.

Your legal policy should also include a breach notification workflow that spells out how your team should respond.

Intellectual property ownership and usage rights

Outputs generated from your analysis belong to your organization, and they can be used for all kinds of marketing purposes, so long as there is no PII or customer-sensitive information there.

Also, the use of these outputs must not infringe on the rights of your customers or of the provider of the LLM used for processing.

Clear contact information for compliance inquiries

Your marketing team members should have direct access to the compliance and data protection units within your organization for inquiries or approvals as needed. So, include the name, title, email address, and phone number of your compliance officer, in-house lawyer, and/or data protection officer in the legal policy, and keep these details current.

Where’s the Line Between Legal and Illegal in Copy-Pasting Sensitive Data into LLMs?

The line between legal and illegal copy-pasting is thin and depends on the following compliance and contextual factors:

- There is legal and explicit consent from your customers to use LLMs in processing their data.

- The data subject’s identity (PII) is anonymized or pseudonymized.

- The LLM used is hosted in a secure or private environment, and there’s no need for data transfer to external servers.

- The use is clearly within the purpose for which the data was originally collected.

- The LLM provider has data processing agreements (DPAs) in place and guarantees no data retention, training, or cross-border transfers in violation of privacy laws.

Outside these boundaries, your use is likely to be unlawful.

Operational Checklists for LLM Use in Marketing Analytics

Here are the operational checklists to put in place.

1. Classify data sensitivity levels before input

First, categorize the sensitivity of your corporate marketing data as low, moderate, or high. This helps you assess the risk levels and determine the necessary precautions to take.

Low sensitivity level includes datasets that are generally safe to input:

- Publicly available marketing content, like blog posts and ad copies,

- Market research reports from public sources,

- Industry benchmarks or trend data,

- General strategy questions or idea brainstorming,

- Common FAQs or customer support scripts without PII.

Moderately sensitive data is more internally linked to your marketing operations:

- Aggregated marketing performance data for your brand,

- Shareable internal process documents without sensitive identifiers,

- Vendor pricing or non-public campaign metrics,

- Customer feedback datasets without identifiers,

- Internal training materials.

Highly sensitive datasets contain extremely personal details of your customers and possible trade secrets:

- Payment information, including credit card numbers, bank details,

- Personally Identifiable Information (PII) like names, emails, phone numbers, addresses,

- Health records protected under HIPAA or similar laws,

- Legal contracts, NDAs, or litigation data,

- Trade secrets or unreleased product information,

- Internal financial statements or forecasts.
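The three tiers above can be turned into a simple gate that runs before any dataset is sent to a public LLM. This is a minimal sketch: the category labels are hypothetical shorthand for the list items above, and the rule that only low-sensitivity data may go to a public LLM follows this article's own recommendation. Unknown categories are treated as highly sensitive so that gaps in the mapping fail closed.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


# Hypothetical category labels, loosely based on the lists above
CATEGORY_LEVELS = {
    "public_content": Sensitivity.LOW,
    "industry_benchmarks": Sensitivity.LOW,
    "aggregated_performance": Sensitivity.MODERATE,
    "vendor_pricing": Sensitivity.MODERATE,
    "customer_pii": Sensitivity.HIGH,
    "payment_info": Sensitivity.HIGH,
}


def classify(category: str) -> Sensitivity:
    """Look up a category; anything unrecognized is treated as HIGH."""
    return CATEGORY_LEVELS.get(category, Sensitivity.HIGH)


def allowed_in_public_llm(categories: list[str]) -> bool:
    """A dataset takes the level of its most sensitive category."""
    level = max((classify(c) for c in categories), default=Sensitivity.HIGH)
    return level == Sensitivity.LOW
```

A dataset tagged `["public_content", "industry_benchmarks"]` passes the gate, while adding `"vendor_pricing"` to the same dataset blocks it.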

2. Obtain legal and compliance approval for use cases

Before your team applies an LLM to a new use case, have it approved by a Compliance or Data Protection Officer (DPO), who will ensure your use of marketing data on LLMs follows critical regulations like GDPR.

IT security teams, including a Chief Information Security Officer (CISO), are likewise crucial in ensuring your data use does not create a technical vulnerability.

Together, these teams keep your marketing department’s use of LLMs for analyzing marketing data in check.

3. Use internal/private LLM environments only

Public LLMs, such as OpenAI's ChatGPT, send your marketing data to external servers owned and managed by third-party companies. Using them also means leaving your data in an environment you have no control over: no direct oversight, no auditing power, and no say in how the servers are secured.

That’s why you need to switch to internal or private LLM environments if you need to use AI to analyze highly sensitive data at all. An example of such an environment is Improvado AI Agent.

Improvado AI Agent provides a private and compliant LLM environment purpose-built for enterprise marketing analytics. Unlike public AI tools, Improvado operates within strict security frameworks, compliant with SOC 2, HIPAA, and GDPR, ensuring sensitive marketing and business data remains protected.

For organizations with stringent data governance requirements, the AI Agent can run directly on top of your data warehouse, eliminating the need to move or replicate data outside your infrastructure.

This architecture enables enterprises to leverage AI-driven insights, anomaly detection, and natural language querying while maintaining full control over their data perimeter, ensuring both accuracy and compliance.

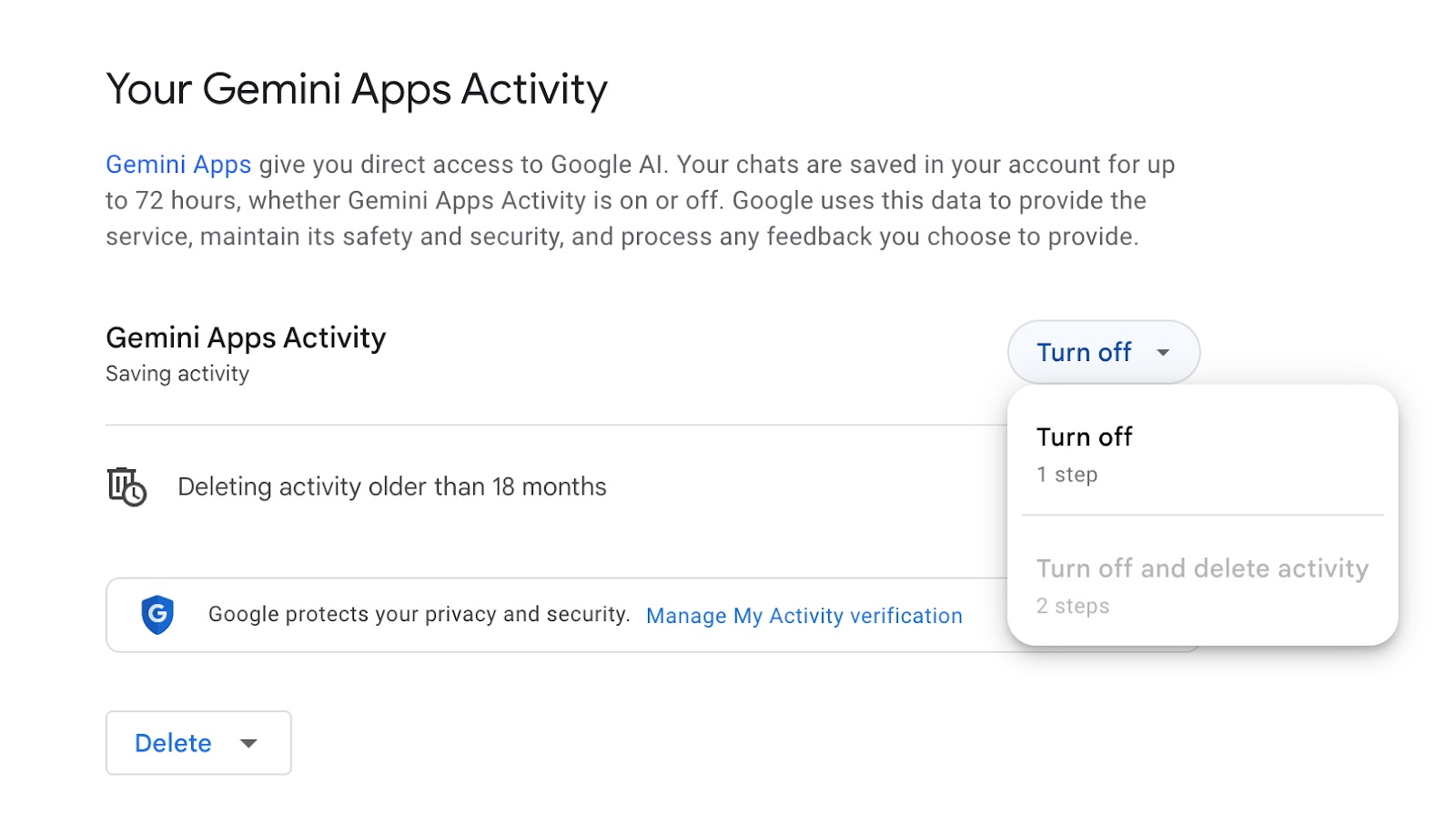

4. Disable chat history and data sharing

Much as businesses archive emails for legal and operational purposes, many LLMs log your interactions, either for future reference or for training.

This default behavior presents a significant data security risk, particularly when dealing with sensitive marketing analytics data.

Therefore, if your organization is unable to implement a private LLM environment and must use public LLMs like Gemini, it is critical to disable data retention and sharing features every time.

This proactive measure prevents your proprietary information from being inadvertently used for model training or stored on external servers beyond your control.

Likewise, don’t forget to delete your sessions after each use to help keep your data secure, whether you’re on a free or paid subscription. Your login to these public LLM environments can be easily compromised if not managed carefully, and that puts your sensitive information at risk.

Above all, never input your company’s data into the free version of any LLM.

5. Log all LLM interactions for auditability

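Keep a record of who used which LLM, when, and with what category of data, so that every interaction can be reviewed later. Auditing these logs also allows for prompt recognition of policy violations. This enables a swift response to potential crises arising from misuse, while supporting compliance with relevant regulations.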
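A minimal sketch of interaction logging, assuming an append-only JSON Lines file and hypothetical field names. It stores a SHA-256 hash and the length of each prompt instead of the prompt text, so the audit log does not become a second copy of sensitive data. Whether you also need to retain full prompts is a decision for your compliance team.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_entry(user: str, tool: str, prompt: str, sensitivity: str) -> dict:
    """Describe one LLM interaction without storing the prompt text."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "sensitivity": sensitivity,
        # A hash lets auditors match a known prompt without the log holding raw data
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }


def append_audit_entry(path: str, entry: dict) -> None:
    """Append the entry as one JSON object per line."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(entry) + "\n")
```

Each call to `append_audit_entry` adds one line to the file, which keeps the log easy to filter by user, tool, or sensitivity level during a periodic audit.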

6. Define clear escalation paths for misuse

Although it should be rare, a marketing team member might still paste sensitive or restricted data into a public LLM. For cases like this, there should be a well-defined escalation and crisis management pathway that clearly outlines the immediate actions to be taken.

This can include:

- Deleting the data as soon as it is noticed.

- Removing any archived logs or session memory that may have stored the sensitive input.

- Revoking access to the LLM account temporarily while the incident is reviewed.

- Notifying the legal and compliance teams to assess the severity and next steps.

After these steps, document the incident in full for audit and reporting purposes.

7. Restrict third-party plugin or API access

For a comprehensive marketing analytics report, you might sometimes want to use a public LLM with third-party plugins to streamline your process.

For instance, combining a data-crunching plugin like Wolfram Alpha with ChatGPT can speed up your work and make your reports cleaner and more impressive.

But while the convenience is appealing, each plugin or API is another third party that can receive your data. The cost to data security, marketing compliance, and client trust might be too high, so this option is best avoided.

If you’re dealing with sensitive or regulated information, it’s safer to stick with controlled, internal environments, where you get all the Artificial Intelligence functionality you need without putting your data at risk.

8. Train your employees to adopt the AI acceptable use policy

Creating corporate AI policy guidelines and an instruction playbook for the use of marketing data with large language models (LLMs) is only half the work. The other half is to ensure every employee of your organization, not just the marketing team, learns about the policy and can use it to guide their work.

Additionally, regulatory requirements and global data security practices are subject to change periodically. It’s therefore essential to update your policy occasionally and keep your organization abreast of these changes.

Wrapping up

Marketing data is the backbone of every campaign. Using LLMs helps you uncover what’s working, what’s not, and how to improve performance. But when you're feeding this data into AI tools, especially public ones, you need clear operational boundaries in place.

Start by classifying your data into low, moderate, and high sensitivity levels. Moderately and highly sensitive data should never be used in public LLMs. Establish a clear legal approval path to ensure marketing and legal teams are aligned before any sensitive data is analyzed.

Train your employees to use private, secure environments. Make it mandatory for users to log all interactions with LLMs—this creates an audit trail that’s useful for tracking and accountability. In case of misuse, whether accidental or intentional, your legal policy should clearly outline the steps to take.

Finally, steer clear of third-party plugins in public LLMs. These tools add more risk.
