You spend thousands, or even millions, on marketing every month. You see clicks, conversions, and revenue. But a critical question remains: how much of that success did your marketing actually cause? How many of those customers would have converted anyway?

Traditional metrics often mislead. They show correlations, not causation. This is where marketing incrementality changes the game. It is the science of measuring the true, causal impact of your marketing efforts. It isolates the lift generated by a specific campaign or channel, giving you a clear picture of its real value and helping you make smarter, data-driven decisions.

Key Takeaways:

- Incrementality answers "what would have happened anyway?" by isolating the true lift from a marketing activity, unlike attribution, which only assigns credit.

- The core of incrementality measurement is comparing a group that sees your marketing (test group) to a similar group that doesn't (control group).

- By focusing on incremental lift, you can calculate a more accurate incremental Return on Ad Spend (iROAS) and stop wasting budget on campaigns that have no measurable impact.

- Accurate incrementality testing requires clean, unified data from all your marketing platforms, a significant challenge for most organizations.

What Is Marketing Incrementality?

Marketing incrementality is a measurement approach that determines the causal effect of a marketing campaign. It isolates the outcomes (like sales or sign-ups) that happened only because of your marketing intervention. It separates these results from outcomes that would have occurred organically.

The Core Question: "What Would Have Happened Anyway?"

Imagine you run a Facebook ad campaign. At the end of the month, you see 1,000 sales from people who saw the ad. It’s tempting to credit all 1,000 sales to the campaign.

However, incrementality forces you to ask a tougher question. How many of those 1,000 people were already loyal customers planning to buy?

How many would have found your site through Google search?

Incrementality measurement is the process of finding the answer.

Moving Beyond Correlation to Causation

Standard marketing reports are full of correlations.

For example, "When we spend more on ads, sales go up." This is a correlation. Incrementality digs deeper to find causation. It proves that "Spending more on ads caused sales to go up by a specific, measurable amount."

This distinction is the key to efficient budget allocation and real growth.

Here’s a simple example.

A local coffee shop sends a "50% off" coupon to half of its loyalty members (the test group). The other half receives nothing (the control group).

At the end of the week, the test group bought 300 coffees and the control group bought 100. Because the two groups are the same size, the lift is simply the difference: 200 coffees (300 - 100).

The 100 coffees bought by the control group represent the baseline, or what would have happened anyway. The 200 extra coffees are the incremental lift directly caused by the promotion.
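The coffee shop arithmetic works because both halves of the loyalty list are the same size. Below is a minimal Python sketch of the more general calculation. It compares per-member purchase rates, so unequal groups are still handled fairly. The group sizes are hypothetical.

```python
def incremental_units(test_units: int, test_size: int,
                      control_units: int, control_size: int) -> float:
    """Incremental units = test outcome minus the baseline implied by the control group.

    The control group's per-member rate is scaled up to the test group's size,
    so the comparison stays fair even when the two groups differ in size.
    """
    baseline = control_units / control_size * test_size
    return test_units - baseline


# Equal halves, as in the coffee shop example (1,000 members each is hypothetical).
print(incremental_units(300, 1_000, 100, 1_000))

# Hypothetical unequal split: a 500-member control group buying 50 coffees
# implies the same 10% baseline rate, so the lift is still about 200 coffees,
# not the 250 a raw 300 - 50 subtraction would suggest.
print(incremental_units(300, 1_000, 50, 500))
```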

Incrementality vs. Attribution vs. Marketing Mix Modeling (MMM)

Marketers have several tools to measure performance. Incrementality, attribution, and MMM are the most common.

All three frameworks answer different questions and should be used together for a complete picture. Understanding their differences is crucial for building a sophisticated measurement strategy.

Marketing Attribution: Assigning Credit Along the Path

Attribution models distribute credit for a conversion across various touchpoints in the customer journey.

For example, a last-touch model gives 100% of the credit to the final ad a customer clicked. Multi-touch attribution models spread the credit across several touchpoints. They use either fixed rules (such as equal credit for every touch) or algorithms that estimate each touchpoint's contribution.

Attribution is great for understanding the path to conversion, but it doesn't show whether any of those touchpoints were actually necessary to cause the conversion.
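Here is a minimal Python sketch of two rule-based models. The first is last-touch. The second is linear attribution, a common multi-touch rule that splits credit equally. The customer journey is hypothetical. Both functions only redistribute credit for a conversion that already happened. Neither can say whether the conversion would have occurred without these touches.

```python
from collections import defaultdict


def last_touch(path: list[str]) -> dict[str, float]:
    """Give 100% of the credit to the final touchpoint."""
    return {path[-1]: 1.0}


def linear(path: list[str]) -> dict[str, float]:
    """Split credit equally across every touchpoint in the journey."""
    credit: dict[str, float] = defaultdict(float)
    for touch in path:
        credit[touch] += 1.0 / len(path)
    return dict(credit)


# Hypothetical journey for one converting customer.
journey = ["Display", "Facebook", "Email", "Google Search"]
print(last_touch(journey))  # {'Google Search': 1.0}
print(linear(journey))      # each touchpoint gets 0.25
```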

Marketing Mix Modeling (MMM): The Top-Down View

MMM is a statistical analysis that uses historical data to show how various marketing inputs affect a business outcome like sales. It looks at a high level, incorporating factors like TV ads, seasonality, and economic trends.

MMM is powerful for long-term strategic planning and budget allocation across broad categories (for example, comparing offline and online). However, it's not granular enough to measure the impact of a single digital campaign.

Incrementality: Isolating Causal Lift

Incrementality focuses on one thing: causation.

It uses controlled experiments to determine the specific lift generated by a single activity. It's the most precise way to answer, "Did this specific ad campaign cause more sales?"

It operates at a granular level, making it perfect for tactical optimization of digital channels.

| Aspect | Incrementality | Marketing Attribution | Marketing Mix Modeling (MMM) |
|---|---|---|---|
| Core Question | Did my marketing cause this outcome? | Which touchpoints get credit for this outcome? | How does my total marketing mix impact outcomes? |
| Methodology | Controlled experiments (Test vs. Control) | Rule-based or algorithmic credit assignment | Top-down statistical regression analysis |
| Focus | Causation | Correlation and Journey Mapping | Correlation and High-Level Impact |
| Granularity | Very high (Campaign, Ad Set, Creative) | Medium (Channel, Touchpoint) | Low (Channel Mix, Geography) |
| Time Frame | Short-term (In-flight campaigns) | Short to medium-term | Long-term (Quarterly, Annually) |
| Primary Use Case | Tactical optimization, budget justification | Channel performance analysis, journey insights | Strategic budget allocation, forecasting |
| Key Output | Incremental Lift, iROAS | Conversions per channel | Contribution of each channel to total sales |

Why Is Incrementality the Gold Standard for Marketers?

Adopting an incrementality mindset is a game-changer for marketing teams. It shifts the focus from vanity metrics to true business impact.

This leads to more efficient spending, better strategies, and demonstrable value for the business.

Unlock True Marketing ROI and ROAS

Standard ROAS can be misleading. It divides all revenue attributed to a campaign by its ad spend, including revenue that would have come in anyway.

Incremental ROAS (iROAS) is far more accurate. It divides only the incremental revenue by the ad spend. This tells you the real return your ad dollars are generating.

By measuring incremental ROAS, marketers can optimize budget allocation to focus on high-performing campaigns with proven incremental lift, improving overall marketing efficiency and return.
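The difference between the two ratios is easiest to see side by side. In this Python sketch, the figures are hypothetical. A campaign is credited with $80,000 of revenue, and a control group indicates that $30,000 of it would have happened anyway. Subtracting a baseline like this is a simplification. In practice, incremental revenue comes from the test-versus-control comparison.

```python
def roas(attributed_revenue: float, ad_spend: float) -> float:
    """Standard ROAS: all revenue credited to the campaign, per dollar of spend."""
    return attributed_revenue / ad_spend


def iroas(incremental_revenue: float, ad_spend: float) -> float:
    """Incremental ROAS: only the revenue the campaign caused, per dollar of spend."""
    return incremental_revenue / ad_spend


ad_spend = 10_000
attributed_revenue = 80_000   # hypothetical: revenue attributed to the campaign
baseline_revenue = 30_000     # hypothetical: revenue that would have happened anyway
incremental_revenue = attributed_revenue - baseline_revenue

print(roas(attributed_revenue, ad_spend))    # 8.0
print(iroas(incremental_revenue, ad_spend))  # 5.0
```

The same campaign looks like an 8x return on a standard ROAS view but delivers 5x on an incremental basis. The gap is revenue the campaign was credited with but did not cause.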

Eliminate Wasted Ad Spend

Incrementality testing often reveals surprising truths. You might discover that a retargeting campaign is simply reaching customers who would have bought anyway. Or a branded search campaign is capturing demand that your organic presence would have captured for free.

By focusing on incremental ROAS, marketers avoid scaling campaigns that look successful in traditional ROAS terms but are mainly cannibalizing existing sales or benefiting from organic demand.

This leads to more efficient budget allocation, concentrating investments on campaigns that produce real, measurable growth, thus minimizing spend on ineffective or redundant ads.

Make Smarter Budget Allocation Decisions

When you know the incremental lift of each channel and campaign, budget allocation becomes a science, not a guessing game. You can confidently shift funds from low-incrementality tactics to high-incrementality tactics.

This data-driven approach ensures every dollar is working as hard as possible to grow the business.

Gain Deeper Customer Behavior Insights

Incrementality tests reveal how different audiences respond to your marketing. You might learn that ads are highly effective for new customers but have little impact on existing ones. Or you might find that a discount offer is the only thing that moves a certain segment.

These insights go beyond simple performance metrics, helping you build more effective, personalized marketing strategies.

The Complete Guide to Measuring Incrementality: Methods & Models

Measuring incrementality isn't a single action; it's a discipline supported by several established methodologies. The right choice depends on your channel, budget, and the specific question you're trying to answer. All methods, however, are built on the same fundamental principle.

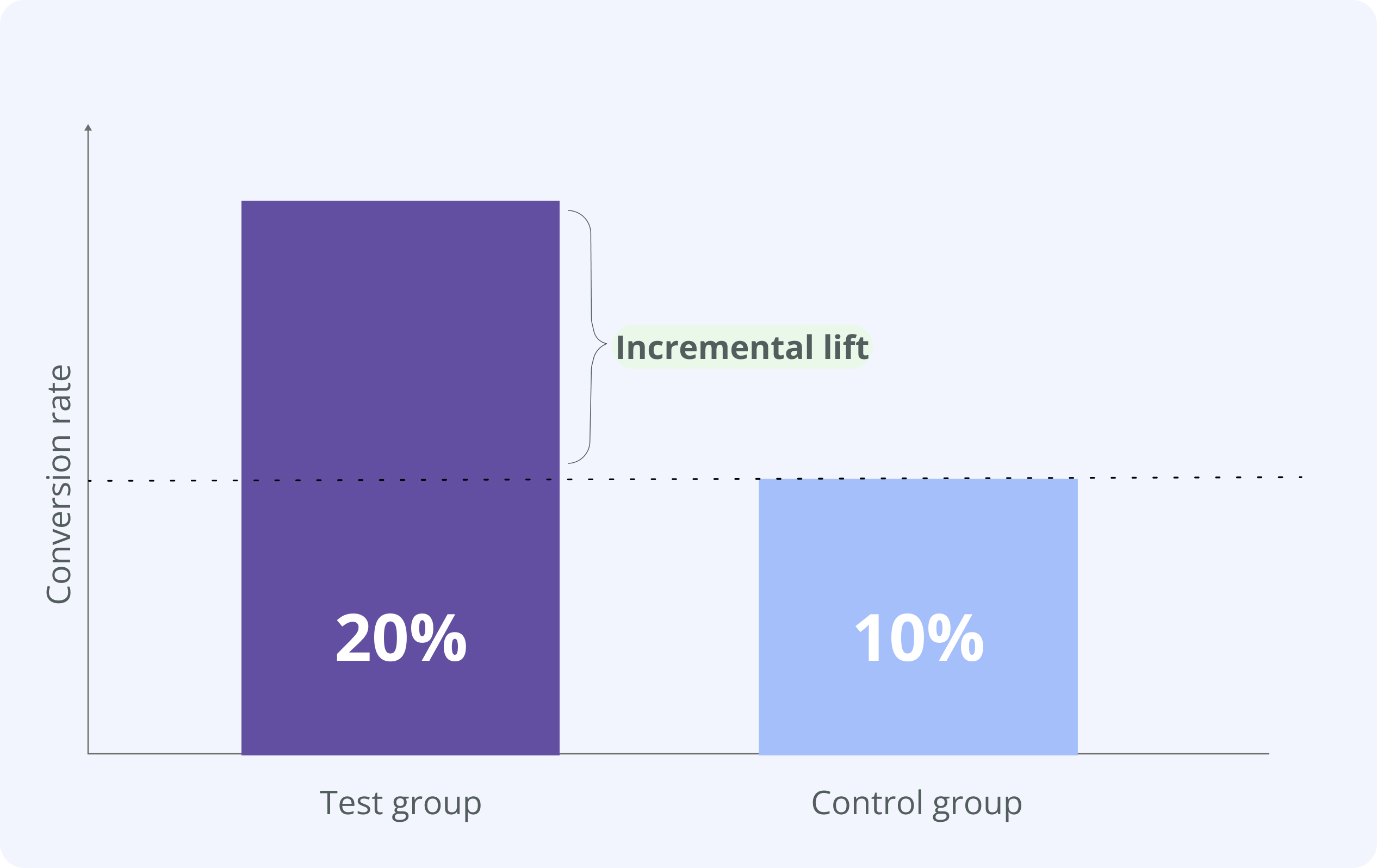

The Foundation: Test vs. Control Groups

Every valid incrementality test relies on creating two groups:

- Test group (or treatment group): This segment of your audience is exposed to the marketing activity you want to measure (for example, they see the ad).

- Control group (or holdout group): This segment is intentionally withheld from the marketing activity. Otherwise, it should be statistically equivalent to the test group, so that any difference in outcomes can be traced to the marketing.

The difference in outcomes between the test group and the control group is the incremental lift.

Method 1: Randomized Controlled Trials (RCTs)

This is the most scientifically rigorous approach. In an RCT, users are randomly assigned to either the test group or the control group. Randomization ensures that the only systematic difference between the groups is their exposure to your ad.

This makes it the gold standard for establishing a causal link. Many major ad platforms like Meta and Google have built-in lift study tools that use this methodology.
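In practice, random assignment is often implemented by hashing a stable user ID. This behaves like a random draw but keeps each user in the same group on every visit. The Python sketch below assumes a hypothetical experiment salt and a 10% control share, echoing the 90/10 split discussed in Step 3.

```python
import hashlib


def assign_group(user_id: str, salt: str = "incrementality-test-1",
                 control_share: float = 0.10) -> str:
    """Deterministically assign a user to 'test' or 'control'.

    Hashing the salted user ID yields a pseudo-random number in [0, 1); users
    below control_share go to the holdout. The salt is hypothetical; changing
    it reshuffles everyone, so each experiment should use its own.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits mapped to [0, 1)
    return "control" if bucket < control_share else "test"


groups = [assign_group(f"user-{i}") for i in range(100_000)]
print(groups.count("control") / len(groups))  # close to 0.10
```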

Method 2: Conversion Lift Studies (Platform-Specific)

These are essentially RCTs managed within an ad platform's ecosystem.

For example, a Facebook Conversion Lift Study creates a holdout group of users who are eligible to see your ads but are prevented from seeing them. The platform then measures and reports the difference in conversion rates between the test group and the holdout group.

Conversion lift studies are convenient but operate within the platform's "walled garden."

Method 3: Geo-Lift or Matched Market Testing

Geo-lift tests are ideal for channels that are difficult to measure at a user level, like TV, radio, or billboards. The methodology involves dividing geographic areas (like cities or states) into test and control groups.

The test markets receive the advertising, while the control markets do not. The incremental lift is measured by comparing the change in KPIs (for example, sales) in the test markets versus the control markets during the campaign period.
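One simple way to compare "the change in test markets versus the change in control markets" is a difference-in-differences calculation. The control markets' change stands in for what would have happened in the test markets without the campaign. Below is a minimal Python sketch using hypothetical average weekly sales per market. Dedicated geo-lift tools generally use more elaborate models, so treat this as an illustration of the logic only.

```python
from statistics import mean


def geo_lift_did(test_pre: list[float], test_during: list[float],
                 control_pre: list[float], control_during: list[float]) -> float:
    """Difference-in-differences estimate of lift per market per week.

    Each list holds one value per market: its average weekly sales before
    (pre) or during the campaign.
    """
    test_change = mean(test_during) - mean(test_pre)
    control_change = mean(control_during) - mean(control_pre)
    # Whatever the control markets did on their own (seasonality, trends) is
    # subtracted out; the remainder is attributed to the campaign.
    return test_change - control_change


# Hypothetical markets: two test cities and two control cities.
lift = geo_lift_did(test_pre=[100, 120], test_during=[140, 150],
                    control_pre=[105, 115], control_during=[118, 122])
print(lift)  # 25 extra sales per market per week
```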

Method 4: Causal Inference Models (Advanced)

When a true RCT is not possible, data scientists can use advanced statistical models to estimate incrementality. Techniques like "Difference-in-Differences" or "Propensity Score Matching" use observational data to build a comparison group that approximates what would have happened without the marketing.

These methods are complex and require significant data and expertise, but they can provide valuable directional insights when experimental options are limited.
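A full propensity score model is beyond a short example, but the core matching idea fits in a few lines. This Python sketch is a simplified stand-in. It matches each exposed user to the unexposed user with the closest value of a single pre-campaign covariate (hypothetical prior purchase counts). Propensity score matching would instead match on a modeled probability of exposure, estimated from many covariates. The data are invented to show selection bias: exposed users tend to be heavier buyers.

```python
def matched_lift(exposed: list[tuple[int, int]],
                 unexposed: list[tuple[int, int]]) -> float:
    """Nearest-neighbour matching estimate of lift among exposed users.

    Each tuple is (prior_purchases, converted), with converted in {0, 1}.
    Every exposed user is paired with the unexposed user whose prior_purchases
    is closest (matching with replacement), and outcome differences are averaged.
    """
    diffs = []
    for prior, converted in exposed:
        match = min(unexposed, key=lambda u: abs(u[0] - prior))
        diffs.append(converted - match[1])
    return sum(diffs) / len(diffs)


def conversion_rate(group: list[tuple[int, int]]) -> float:
    return sum(converted for _, converted in group) / len(group)


def naive_lift(exposed: list[tuple[int, int]],
               unexposed: list[tuple[int, int]]) -> float:
    """Raw difference in conversion rates, ignoring who was exposed."""
    return conversion_rate(exposed) - conversion_rate(unexposed)


exposed = [(5, 1), (4, 1), (3, 1), (0, 0)]
unexposed = [(0, 0), (0, 0), (1, 0), (4, 1), (5, 1), (3, 0)]
print(naive_lift(exposed, unexposed))    # inflated by selection bias
print(matched_lift(exposed, unexposed))  # 0.25
```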

| Aspect | Randomized Controlled Trial (RCT) | Platform Lift Study | Geo-Lift Test | Causal Inference Model |
|---|---|---|---|---|
| Accuracy | Very High (Gold Standard) | High (Within Platform) | High (For Mass Media) | Medium to High (Depends on model) |
| Complexity | High (Requires careful setup) | Low (Platform-managed) | Medium (Requires clean geo data) | Very High (Requires data science) |
| Cost | Medium (Opportunity cost of holdout) | Low (Included in ad spend) | High (Requires significant spend) | Medium (Data science resources) |
| Best For | Digital channels, strategic questions | Optimizing campaigns on Meta, Google, etc. | TV, Radio, OOH, cross-channel effects | When experiments are not feasible |
| Data Requirement | User-level exposure and conversion data | Platform's internal data | Aggregated sales data by geography | Large, granular historical datasets |

How to Design and Run an Incrementality Test (Step-by-Step)

Running a successful incrementality test requires careful planning and execution. Here’s a six-step framework for your next incrementality test.

Step 1: Define Your Hypothesis and KPIs

Start with a clear question. What exactly are you trying to prove?

A good hypothesis is specific and measurable. For example: "Running our new video ad campaign on YouTube will cause a 10% increase in new user sign-ups compared to not running the campaign."

Your key performance indicator (KPI) here is "new user sign-ups."

Step 2: Choose the Right Testing Methodology

Based on your hypothesis and the channel you're testing, select the appropriate method from the list above.

For example:

- If you're testing a Facebook campaign, a platform Conversion Lift Study is a great choice.

- If you're testing a new TV spot, a Geo-Lift test is the way to go.

- For broader market-level campaigns, Matched Market Testing compares similar but separate markets, some running the campaign and some not, to gauge lift.

- For time-based campaigns, Time-Based Holdout Testing compares performance during active campaign periods with performance during paused or prior periods to identify incremental effects.

Step 3: Select Your Audience and Create Groups

Define the audience for your test. Then, split this audience into a test group and a control group. The split should be random to avoid bias.

A typical split is 90% test and 10% control, but this can vary.

The key is that the control group must be large enough to provide a stable baseline.

Step 4: Ensure Statistical Significance

Before launching, calculate the required sample size and test duration to achieve statistical significance. A statistically significant result is one that is unlikely to be explained by random chance alone.

If your audience is too small or the test is too short, you might not be able to detect a real lift, leading to a false negative conclusion.

There are many online calculators that can help with this.
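For a conversion-rate KPI, the calculation these calculators perform can be sketched with the standard normal-approximation formula for comparing two proportions. This Python version uses only the standard library. It assumes a two-sided test, the conventional 5% significance level, and 80% power. It also reuses the baseline and expected rates from the lift example later in this guide (1.5% and 2.0%). The function accepts a test-to-control ratio, so you can see how a 90/10 split changes the required audience.

```python
from math import ceil
from statistics import NormalDist


def required_sample_sizes(p_control: float, p_test: float, alpha: float = 0.05,
                          power: float = 0.80,
                          test_to_control: float = 1.0) -> tuple[int, int]:
    """Approximate (control, test) group sizes to detect p_test vs p_control.

    Normal approximation with unpooled variances:
        n_control = (z_{1-alpha/2} + z_{power})^2
                    * [p_c(1-p_c) + p_t(1-p_t)/k] / (p_t - p_c)^2
    where k = n_test / n_control.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    k = test_to_control
    variance = p_control * (1 - p_control) + p_test * (1 - p_test) / k
    n_control = ceil((z_alpha + z_power) ** 2 * variance / (p_test - p_control) ** 2)
    return n_control, ceil(k * n_control)


print(required_sample_sizes(0.015, 0.02))                     # 50/50 split
print(required_sample_sizes(0.015, 0.02, test_to_control=9))  # 90/10 split
```

Under these assumptions, the 90/10 split needs a considerably larger total audience than an even split to detect the same lift. This is the trade-off behind keeping the control group large enough.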

Step 5: Execute the Test and Collect Data

Launch the campaign. The test group sees the ads, and the control group does not. It is critical to maintain the integrity of the experiment during this phase.

Avoid making other major marketing changes that could contaminate the results. All the while, you need to collect clean performance data for both groups.

Step 6: Analyze the Results and Calculate Lift

Once the test is complete, compare the conversion rate (or your chosen KPI) of the test group to the conversion rate of the control group. The difference between the two is your incremental lift.

Analyze the results to determine if your hypothesis was correct and if the lift was statistically significant.
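One common way to check significance for a conversion-rate KPI is a two-proportion z-test. Below is a minimal Python sketch using only the standard library. The counts are hypothetical, built around the 2.0% vs 1.5% rates used later in this guide and a 100,000-user test group.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_test: int, n_test: int,
                          conv_control: int, n_control: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for test vs control conversion rates."""
    p_test = conv_test / n_test
    p_control = conv_control / n_control
    # Pooled rate under the null hypothesis that the campaign has no effect.
    pooled = (conv_test + conv_control) / (n_test + n_control)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_test + 1 / n_control))
    z = (p_test - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Same 2.0% vs 1.5% rates, two hypothetical control-group sizes.
print(two_proportion_z_test(2_000, 100_000, 150, 10_000))  # 10,000-user control
print(two_proportion_z_test(2_000, 100_000, 15, 1_000))    # 1,000-user control
```

With the larger control group, the difference is comfortably significant at the 5% level. With only 1,000 control users, the very same rates are not. That is why sample size has to be planned before launch.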

Calculating Incremental Lift: Formulas and Examples

Once your test is complete, the final step is to quantify the results. The calculations are straightforward and provide the clear, actionable metrics leadership wants to see.

The Basic Incremental Lift Formula

The core formula measures the percentage increase in your KPI caused by the marketing activity.

Incremental Lift % = [(Test Group Conversion Rate / Control Group Conversion Rate) - 1] * 100

If your test group converted at 2% and your control group converted at 1.5%, the lift is [(2.0 / 1.5) - 1] * 100 = 33.3%.

Calculating Incremental Conversions

This tells you the raw number of extra conversions your campaign generated.

Incremental Conversions = (Test Group Conversion Rate - Control Group Conversion Rate) * Number of People in Test Group

Using the example above, with 100,000 people in the test group: (0.02 - 0.015) * 100,000 = 500 incremental conversions.

Calculating Incremental ROAS (iROAS)

This is the ultimate metric for proving value. It measures how much incremental revenue each dollar of ad spend generated.

Incremental Revenue = Incremental Conversions * Average Order Value

iROAS = Incremental Revenue / Ad Spend

If your AOV is $100 and you spent $10,000: Incremental Revenue = 500 * $100 = $50,000. Your iROAS = $50,000 / $10,000 = 5.0x.
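The three formulas chain together naturally. This Python sketch reproduces the worked example above: 2.0% vs 1.5% conversion rates, 100,000 people in the test group, a $100 AOV, and $10,000 of ad spend. The function name and structure are just one way to organize it. Results are rounded only for display, because floating-point arithmetic can leave tiny residues.

```python
def incrementality_summary(rate_test: float, rate_control: float, test_size: int,
                           avg_order_value: float, ad_spend: float) -> dict[str, float]:
    """Apply the lift, incremental-conversion, and iROAS formulas in sequence."""
    lift_pct = (rate_test / rate_control - 1) * 100
    incremental_conversions = (rate_test - rate_control) * test_size
    incremental_revenue = incremental_conversions * avg_order_value
    iroas = incremental_revenue / ad_spend
    return {
        "lift_pct": lift_pct,
        "incremental_conversions": incremental_conversions,
        "incremental_revenue": incremental_revenue,
        "iroas": iroas,
    }


summary = incrementality_summary(0.02, 0.015, 100_000, 100, 10_000)
for name, value in summary.items():
    print(f"{name}: {value:,.2f}")
```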

Common Pitfalls and Challenges in Incrementality Testing

While powerful, incrementality measurement is not without its challenges. Being aware of these common pitfalls can help you design better tests and interpret your results more accurately.

Many of these issues stem from fragmented data.

Data Contamination and Audience Overlap

The biggest threat to a clean test is contamination. This happens when members of your control group are inadvertently exposed to the ad you are testing, perhaps through another channel or device.

This "leaking" can shrink your measured lift and make a successful campaign appear ineffective. Careful test design is needed to minimize this.
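To see how much leakage can distort a result, here is a small Python model. As a simplification, it assumes that any control-group member who is accidentally exposed converts at the test-group rate. The rates reuse the 2.0% vs 1.5% example from the formula section, and the leak shares are hypothetical.

```python
def observed_lift_pct(rate_test: float, rate_control_clean: float,
                      leak_share: float) -> float:
    """Lift % you would measure if a share of the control group were exposed.

    Leaked control users are assumed to convert at the test-group rate, which
    pulls the observed control rate up toward the test rate.
    """
    rate_control_observed = ((1 - leak_share) * rate_control_clean
                             + leak_share * rate_test)
    return (rate_test / rate_control_observed - 1) * 100


for leak in (0.0, 0.1, 0.2, 0.5):
    lift = observed_lift_pct(0.02, 0.015, leak)
    print(f"{leak:.0%} of control exposed -> measured lift {lift:.1f}%")
```

Under this assumption, a true 33.3% lift shrinks to 25% when a fifth of the control group leaks, and it keeps shrinking as leakage grows.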

Achieving Statistical Significance with Small Budgets

To get a reliable result, you need a certain number of conversions in both your test and control groups.

For businesses with smaller budgets or lower conversion volumes, running a test long enough to reach statistical significance can be expensive or time-consuming. This can make incrementality testing challenging for smaller advertisers.

The "Holdout Effect" on Control Groups

Intentionally withholding advertising from a control group has an opportunity cost. You are potentially losing sales from that group during the test period. Businesses must be comfortable with this short-term trade-off to gain long-term strategic insights.

This is often a point of contention between marketing and finance teams.
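The size of this trade-off can be estimated before launch. The Python sketch below assumes the holdout users would have converted at the test-group rate had they seen the ads. Its inputs are hypothetical: a 100,000-person audience, a 10% holdout, the 2.0% vs 1.5% rates, and a $100 average order value.

```python
def holdout_opportunity_cost(audience_size: int, holdout_share: float,
                             rate_test: float, rate_control: float,
                             avg_order_value: float) -> tuple[float, float]:
    """Estimate conversions and revenue forgone by withholding ads from the holdout."""
    holdout_users = audience_size * holdout_share
    forgone_conversions = holdout_users * (rate_test - rate_control)
    return forgone_conversions, forgone_conversions * avg_order_value


conversions, revenue = holdout_opportunity_cost(100_000, 0.10, 0.02, 0.015, 100)
print(f"~{conversions:.0f} conversions, ~${revenue:,.0f} in revenue forgone")
```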

Measurement in "Walled Gardens"

Platforms like Google and Meta offer their own lift study tools. While convenient, they only measure the impact within their own ecosystem. They cannot easily measure the cross-channel effects of your campaigns.

For example, a Facebook campaign might drive incremental sales through Google Search, which a standard Facebook lift study would miss.

Data Fragmentation Across Platforms

To run sophisticated tests and analyze results, you need data from multiple sources: ad platforms, analytics tools, and your CRM. This data is often siloed and in different formats.

Manually stitching it together is time-consuming and error-prone. This is the single biggest operational barrier to adopting incrementality measurement at scale.
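To illustrate what this stitching involves, here is a minimal Python sketch. It maps two platforms' exports onto one schema and totals them by day. The platform names, field names, and units are hypothetical and do not reflect any particular vendor's API. Real pipelines also have to reconcile time zones, attribution windows, and currencies.

```python
from collections import defaultdict

# Hypothetical mapping: source field -> (common field, multiplier for numeric values).
SCHEMAS = {
    "platform_a": {"day": ("date", None), "spend_usd": ("cost", 1.0),
                   "purchases": ("conversions", 1.0)},
    "platform_b": {"date": ("date", None), "spend_cents": ("cost", 0.01),
                   "conv": ("conversions", 1.0)},
}


def normalize(platform: str, row: dict) -> dict:
    """Rename fields and convert units so every platform shares one schema."""
    out = {"platform": platform}
    for source_field, (common_field, multiplier) in SCHEMAS[platform].items():
        value = row[source_field]
        out[common_field] = value if multiplier is None else value * multiplier
    return out


def daily_totals(rows: list[dict]) -> dict[str, dict[str, float]]:
    """Sum normalized cost and conversions per date across platforms."""
    totals: dict[str, dict[str, float]] = defaultdict(
        lambda: {"cost": 0.0, "conversions": 0.0})
    for row in rows:
        totals[row["date"]]["cost"] += row["cost"]
        totals[row["date"]]["conversions"] += row["conversions"]
    return dict(totals)


rows = [
    normalize("platform_a", {"day": "2024-05-01", "spend_usd": 120.0, "purchases": 3}),
    normalize("platform_b", {"date": "2024-05-01", "spend_cents": 8_000, "conv": 2}),
]
print(daily_totals(rows))
```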

The Role of a Unified Data Platform in Incrementality

The challenges of incrementality testing highlight a central truth: you cannot build a reliable measurement practice on a foundation of messy, siloed data. A unified marketing data platform is the key to unlocking scalable and accurate incrementality measurement.

A unified data foundation removes the operational overhead that slows teams down: manually pulling pre-test baselines, harmonizing cost data, reconciling conversions across platforms, and stitching together experiment and control group performance. With the right platform in place, incrementality becomes a repeatable measurement discipline rather than an ad-hoc analytical project.

Improvado provides the end-to-end data infrastructure required to support robust incremental lift analysis. By automating data collection, normalization, and modeling, the platform gives analysts and media teams a stable foundation for designing, executing, and interpreting tests.

Key capabilities include:

- Historical data extraction across all channels: Retrieve multi-year cost, impression, click, and conversion data to build accurate pre-test baselines.

- Automated normalization of KPIs and taxonomies: Align definitions across platforms (e.g., cost, conversions, revenue) to ensure test and control groups share consistent measurement frameworks.

- Cross-channel entity modeling: Connect campaign, audience, and conversion data into unified entities, critical for measuring impact across multiple touchpoints.

- Time-series alignment and data stitching: Clean and merge data with consistent time zones, attribution windows, and granularity to support valid statistical comparisons.

- Centralized test-result reporting: Consolidate lift results, confidence intervals, holdout performance, and incremental ROAS into structured, analysis-ready datasets.

- Integration with BI tools and modeling environments: Push clean experiment data directly into tools like BigQuery, Snowflake, Tableau, or Looker for deeper analysis.

By providing a complete upstream data foundation, Improvado enables marketing teams to focus on the experiment, not the hours of manual data work behind it.

Conclusion

Marketing incrementality is a fundamental shift in how marketers measure success. It moves us away from ambiguous correlations and toward the clarity of causation. By asking "what would have happened anyway?" you unlock a more profound understanding of your marketing's true impact.

The journey starts with understanding the core concepts and methodologies. It progresses through disciplined testing and rigorous analysis. The ultimate goal is to build an "always-on" culture of experimentation where every significant marketing dollar is justified by the incremental value it creates.
