Updated on

Jan 8, 2026

Explore the 7 Core Capabilities.

Some code here

const value = 0;{

"description": "this is a json format with correct syntax highlight",

"Version": "23Q1"

}| Recipe | Data source |

|---|---|

| Paid Ads | ```paid_ads__basic_performance``` |

| Recipe | Data source |

|---|---|

| Paid Ads | ```paid_ads__basic_performance``` |

| Recipe | Data source |

|---|---|

| Paid Ads | ```paid_ads__basic_performance``` |

{% docs-informer info %}

To better understand the ```Improvado Marketing Common Data Model``` and how it works, you can check the Improvado MCDM Overview.

{% docs-informer-end %}

{% docs-informer warning %}

To better understand the ```Improvado Marketing Common Data Model``` and how it works, you can check the Improvado MCDM Overview.

{% docs-informer-end %}

{% docs-informer error %}

To better understand the ```Improvado Marketing Common Data Model``` and how it works, you can check the Improvado MCDM Overview.

{% docs-informer-end %}

{% docs-informer info %}

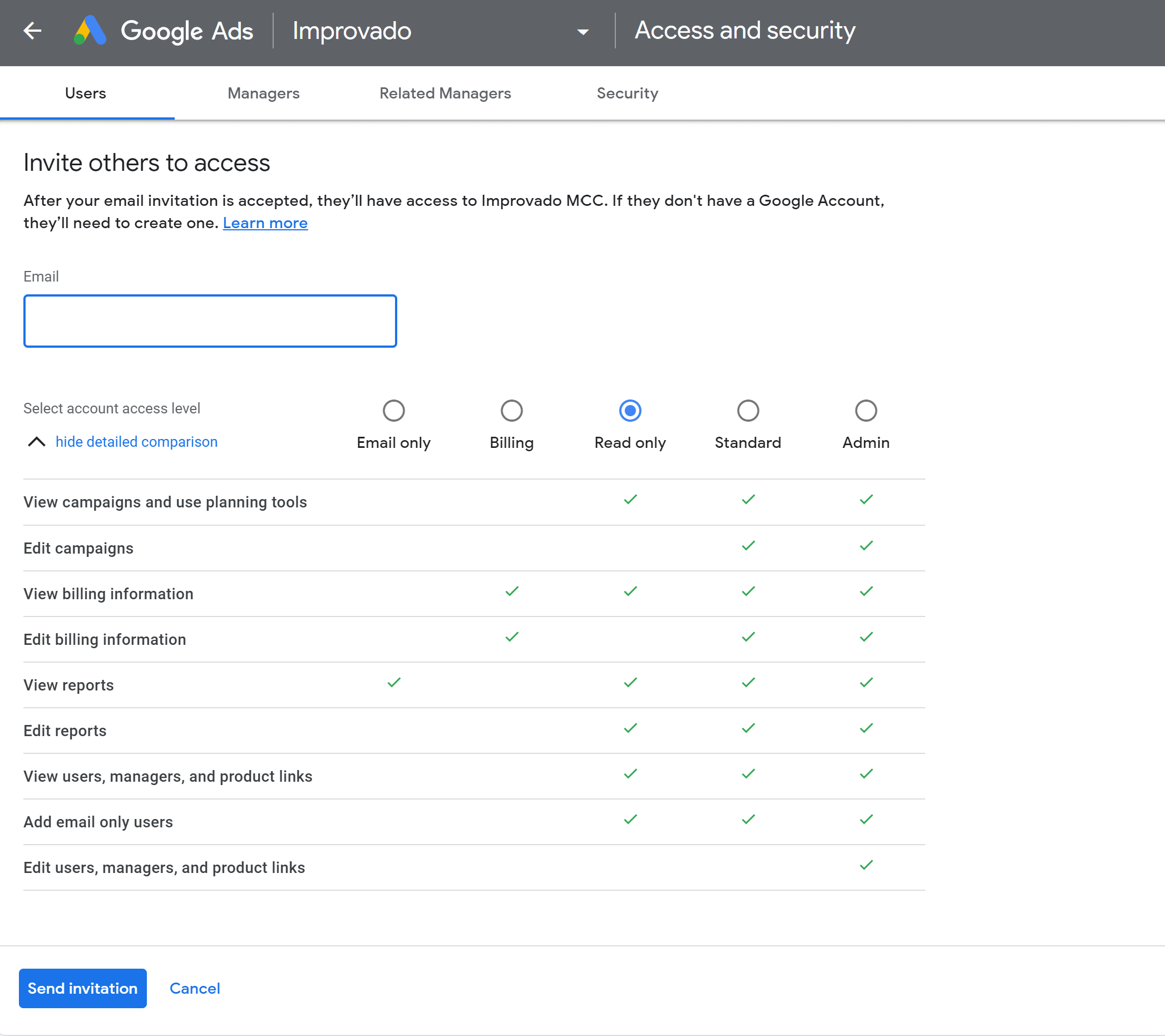

Improvado doesn’t perform any edit, create, or delete operations. To ensure the security of your Google Ads account, we recommend creating a new user or updating an existing one with read-only access to be used for data extraction:

{% docs-informer-end %}

{% docs-informer info %}

Improvado doesn’t perform any edit, create, or delete operations.

| sql_field_name |

|---|

| ```spend / 1.15``` |

| ```'FB'``` |

| ```''``` |

| ```0.0``` |

| ```CASE WHEN campaign_name like '%IG%' THEN 'IG Campaign' ELSE campaign_name END``` |

{% docs-informer-end %}

Step 1. Step 1

Step 2. Step 2

Step 2.1. Step 2.1

Step 3. Step 3

{%docs-accordion title="How does Data Extraction work?"%}

Step 1. Step 1

Step 2. Step 2

{%docs-accordion-end%}

{%docs-accordion title="Solution"%}

When establishing a connection to your Google Big Query, the following error message is displayed:

{%docs-informer error%}

Checking connection failed: Access denied: Dataset <your-project-name>:<your-dataset>: Permission bigquery.tables.create denied on dataset <your-project-name>:<your-dataset> (or it may not exist).{%docs-informer-end%}

{%docs-accordion-end%}

Testing Block Quote

Select the Use static IP option from the dropdown. {%dropdown-button name="use-static-ip" %}

{%dropdown-body name="use-static-ip" %}

Yes

{%dropdown-end%}

{%dropdown-body name="destination" %}

Yes

{%dropdown-end%}

Testing text below

| Name | Type | Status | Tags | Description |

|---|---|---|---|---|

| use_workspace_separate_schemas src_improvado_dataflow_run_events |

column | In progress | technical_field | Flag for using separate database for workspace |

| is_dataflow_run_complete src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics |

Data flow completion flag (1 - completed, 0 - not completed). Useful to filter dataflow run events or aggregate statuses over dataflows or dataflow runs.

select

@dataflow_id,

max(@dataflow_run_event_time, @is_dataflow_run_complete) as last_completed_at

from

@src_improvado_dataflow_run_events

|

| dataflow_run_delay_after_eta_seconds src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics |

Dataflow run’s delay after the expected time in seconds. Useful to track data latency over dataflows.

select

@dataflow_id,

avg(@dataflow_run_delay_after_eta_seconds) as avg_dataflow_latency

from

@src_improvado_dataflow_run_events

where

@is_dataflow_run_complete

group by

@dataflow_id

|

| dataflow_run_duration_seconds src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Dataflow run execution duration in seconds. This is a cumulative metric for dataflow run, so it makes sense to use with aggregation functions like max(@dataflow_run_duration_seconds) or argMax(@dataflow_run_event_time) and group by @dataflow_run_id |

| dataflow_run_event_updated_rows src_improvado_dataflow_run_events |

column | In progress | technical_field | Number of rows updated in data table by data flow event |

| dataflow_run_event_inserted_rows src_improvado_dataflow_run_events |

column | In progress | technical_field | Number of rows inserted into data table by data flow event |

| dataflow_run_schedule_item_time src_improvado_dataflow_run_events |

column | In progress | technical_field | Scheduled item time (technical) |

| dts_agency_data_delivery_timezone_utc_offset src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency’s timezone offset from UTC |

| dts_agency_delivery_eta_time_client_time_zone_str src_improvado_dataflow_run_events |

column | In progress | technical_field | Delivery time string in client's timezone |

| dts_agency_data_delivery_timezone src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency data delivery timezone |

| dts_agency_data_delivery_eta_utc src_improvado_dataflow_run_events |

column | In progress | technical_field | Expected data delivery time in UTC |

| dts_agency_whitelabel_host src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency white-label host |

| dataflow_schedule_time_string src_improvado_dataflow_run_events |

column | In progress | technical_field | String representation of scheduled time (technical) |

| dataflow_type src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Type of dataflow. Might be one of the list:

|

| dataflow_run_date_to src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | End of data daterange processed by the dataflow run event |

| dataflow_run_date_from src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Start of the data daterange processed by the dataflow run event |

| date src_improvado_dataflow_run_events |

column | Active | technical_field | Record insertion date. Used for debugging |

| __insert_date src_improvado_dataflow_run_events |

column | Active | technical_field | Record insertion time in UTC. Used for debugging. |

| dataflow_run_eta_time src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Expected dataflow run completion time in UTC. Used to calculate @dataflow_run_delay_after_eta_seconds as difference between maxIf(@dataflow_run_event_time, @is_dataflow_run_complete) and @dataflow_run_eta_time |

| dataflow_run_started_time src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Actual start time of the dataflow run. |

| dataflow_run_schedule_time src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Planned start time of the dataflow run in UTC. |

| dataflow_run_event_publish_time src_improvado_dataflow_run_events |

column | Active | technical_field | Internal time of dataflow event publication in UTC. Used for debugging. |

| dataflow_run_event_time src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Time of the data flow event occurrence in UTC. Often used within aggregation functions to produce metrics. |

| datasource_connection_uid src_improvado_dataflow_run_events |

column | In progress | technical_field | Unique identifier for connection credentials |

| datasource_connection_id src_improvado_dataflow_run_events |

column | In progress | technical_field | Data source connection identifier |

| datasource_remote_account_id src_improvado_dataflow_run_events |

column | Active | technical_field | Remote account identifier of the data source. |

| Name | Type | Status | Tags | Description |

|---|---|---|---|---|

| use_workspace_separate_schemas src_improvado_dataflow_run_events |

column | In progress | technical_field | Flag for using separate database for workspace |

| is_dataflow_run_complete src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics |

Data flow completion flag (1 - completed, 0 - not completed). Useful to filter dataflow run events or aggregate statuses over dataflows or dataflow runs.

select

@dataflow_id,

max(@dataflow_run_event_time, @is_dataflow_run_complete) as last_completed_at

from

@src_improvado_dataflow_run_events

|

| dataflow_run_delay_after_eta_seconds src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics |

Dataflow run’s delay after the expected time in seconds. Useful to track data latency over dataflows.

select

@dataflow_id,

avg(@dataflow_run_delay_after_eta_seconds) as avg_dataflow_latency

from

@src_improvado_dataflow_run_events

where

@is_dataflow_run_complete

group by

@dataflow_id

|

| dataflow_run_duration_seconds src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Dataflow run execution duration in seconds. This is a cumulative metric for dataflow run, so it makes sense to use with aggregation functions like max(@dataflow_run_duration_seconds) or argMax(@dataflow_run_event_time) and group by @dataflow_run_id |

| dataflow_run_event_updated_rows src_improvado_dataflow_run_events |

column | In progress | technical_field | Number of rows updated in data table by data flow event |

| dataflow_run_event_inserted_rows src_improvado_dataflow_run_events |

column | In progress | technical_field | Number of rows inserted into data table by data flow event |

| dataflow_run_schedule_item_time src_improvado_dataflow_run_events |

column | In progress | technical_field | Scheduled item time (technical) |

| dts_agency_data_delivery_timezone_utc_offset src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency’s timezone offset from UTC |

| dts_agency_delivery_eta_time_client_time_zone_str src_improvado_dataflow_run_events |

column | In progress | technical_field | Delivery time string in client's timezone |

| dts_agency_data_delivery_timezone src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency data delivery timezone |

| dts_agency_data_delivery_eta_utc src_improvado_dataflow_run_events |

column | In progress | technical_field | Expected data delivery time in UTC |

| dts_agency_whitelabel_host src_improvado_dataflow_run_events |

column | In progress | technical_field | Agency white-label host |

| datasource_account_name src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Data source account name. Useful to track dataflow metrics over datasource accounts |

| datasource_name src_improvado_dataflow_run_events |

column | In progress | technical_field | Data source internal name |

| datasource_title src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Data source name. Useful to track dataflow metrics over datasources |

| workspace_title src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Workspace name. Might be useful to aggregate dataflow metrics by workspaces |

| dts_datatable_title src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Title of the datatable related to dataflow. One use case is to aggregate dataflow run’s metrics over datatable title.

select

@dts_datatable_title,

@dataflow_id,

@datasource_account_name,

maxIf(@dataflow_run_event_time, @is_dataflow_run_complete) as last_completed_at

from

@src_improvado_dataflow_run_events

group by

@dts_datatable_title

|

| dataflow_run_event_message src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Data flow event message (including errors). Not empty for events with @dataflow_run_event_status = ‘failed’, used for debugging |

| dataflow_run_event_status src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Unmutable status of a dataflow event. Might be ‘success’ or ‘failed’. Useful as aggregated metric to track failures over dataflows. |

| dataflow_run_event_name src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Name of the dataflow run event. Might be one of the following:

|

| dataflow_run_start_reason src_improvado_dataflow_run_events |

column | Active | good_for_adhoc_analytics | Reason for starting the dataflow run. Might be one of the following:

|

| dataflow_run_name src_improvado_dataflow_run_events |

column | Active | technical_field | Composite key of the dataflow run. Used for debugging. |

| Name | Type | Status | Tags | Description |

|---|---|---|---|---|

| src_improvado_dataflow_run_events | data_product, dbt_model | Active | Data mart of ETL dataflow runs within workspace. Served as append-only event log. Source of the Dataflow Dashboard Every row is an unique event of particular order execution attributed to ETL dataflow. Basically this data is used for custom ETL analytics. For example such query might be used to determine current status of all dataflows: select dataflow_id, max(dataflow_run_started_time) as last_run_start_timestamp, max(dataflow_run_event_time) as last_timestamp, argMax(dataflow_run_event_name, dataflow_run_event_time) as last_event_name, maxIf(dataflow_run_event_time, is_dataflow_run_complete) as last_completion_timestamp, argMax(dataflow_run_duration_seconds, dataflow_run_event_time) as last_dataflow_run_duration_seconds from src_improvado_dataflow_run_events group by dataflow_id |

|

| dataflow_id | column | Active | good_for_adhoc_analytics | Unique identifier of the data flow. This is a composite key that consists of the order type abbreviation (E - extraction, L - load, etc) and @order_id. If a dataflow consists of multiple orders, the key combines them through "_" in the correct sequence. For example, let's say a dataflow consists of an extract order with @order_id = 1231, the result of which is uploaded by a load order with @order_id = 3431. For such a dataflow, the @dataflow_id would look like E1231_L3431. Commonly used as a grouping key when we are interested in overall dataflow metrics. For example, the time of the last successful update: select @dataflow_id, maxIf(@dataflow_run_event_time, @is_dataflow_run_complete) as last_succesful from @src_improvado_dataflow_run_events group by @dataflow_id |

| dataflow_run_id | column | Active | good_for_adhoc_analytics | Unique identifier of the dataflow run. The dataflow run is a single instance of dataflow execution. This identifier is typically used as a grouping key to obtain aggregated metrics. For example, to calculate the average dataflow execution time: with completed_dataflow_run_metrics as ( select @dataflow_run_id, max(@dataflow_run_duration_seconds) as dataflow_run_completion_duration_seconds from src_improvado_dataflow_run_events where @is_dataflow_run_complete group by @dataflow_run_id ) select avg(dataflow_run_completion_duration_seconds) from completed_dataflow_run_metrics; |

| order_id | column | Active | technical_field | Identifier of the ETL order. Foreign key for orders tables like ‘extract_orders’, ‘load_orders’, etc |

| agency_id | column | Active | technical_field | Agency identifier. Used for debugging. |

| workspace_id | column | Active | technical_field | Workspace identifier. Allows to filter or aggregate data by workspace, in case of having multiple workspaces |

| agency_uuid | column | Active | technical_field | Agency UUID. Used for debugging only. |

| dts_datatable_id | column | Active | technical_field | Data table identifier. Foreign key for @src_improvado_datatables. Take a look on @dts_datatable_title for filtering or aggregation by specific data table |

| datasource_remote_account_id | column | Active | technical_field | Remote account identifier of the data source. Foreign key for @src_improvado_datasource_accounts |

| datasource_connection_id | column | In progress | technical_field | Data source connection identifier |

| datasource_connection_uid | column | In progress | technical_field | Unique identifier for connection credentials |

| dataflow_run_event_time | column | Active | good_for_adhoc_analytics | Time of the data flow event occurence in UTC. We recommend to use this field in WHERE clause to speed up query execution. Often used within aggregation functions to produce metrics. See examples in @dataflow_id, @dataflow_run_id descriptions |

| dataflow_run_event_publish_time | column | Active | technical_field | Internal time of dataflow event publication in UTC. Might be slightly after the @dataflow_run_event_time which indicates latency between event ocurrence and publication within Improvado internal system. Used for debugging. |

| dataflow_run_schedule_time | column | Active | good_for_adhoc_analytics | Planned start time of the dataflow run in UTC. In case of scheduled order execution (see @dataflow_run_start_reason) shows the time of dataflow run start time by schedule. Might be different from @dataflow_run_started_time |

| dataflow_run_started_time | column | Active | good_for_adhoc_analytics | Actual start time of the dataflow run. |

| dataflow_run_eta_time | column | Active | good_for_adhoc_analytics | Expected dataflow run completion time in UTC. Used to calculate @dataflow_run_delay_after_eta_seconds as difference between maxIf(@dataflow_run_event_time, @is_dataflow_run_complete) and @dataflow_run_eta_time |

| __insert_date | column | Active | technical_field | Record insertion time in UTC. Used for debugging. |

| date | column | Active | technical_field | Record insertion date. Used for debugging |

| dataflow_run_date_from | column | Active | good_for_adhoc_analytics | Start of the data daterange processed by the dataflow run event. |

| dataflow_run_date_to | column | Active | good_for_adhoc_analytics | End of data daterange processed by the dataflow run event |

| dataflow_type | column | Active | good_for_adhoc_analytics | Type of dataflow. Might be one of the list: - ‘extraction’ - ‘load’ - ‘extraction_and_load’ |

| dataflow_run_name | column | Active | technical_field | Composite key of the dataflow run. Used for debugging. Consider using @dataflow_run_id as grouping key to aggregate metrics by dataflow runs |

| dataflow_run_start_reason | column | Active | good_for_adhoc_analytics | Reason for starting the dataflow run. Might be one of the list: - manual - scheduled - extraction_completed (for load’s @dataflow_run_event_name with @dataflow_type = ‘extraction_and_load’) - order_created (for the first dataflow run after order creation) |

| dataflow_run_event_name | column | Active | good_for_adhoc_analytics | Name of the dataflow run event. Might be one of: - Extract order started - Extract order range completed (completion of batch/part within extraction) - Extract order complete - Load order started - Load order complete Common usecases are: - metric aggregated by @dataflow_run_id |

| dataflow_run_event_status | column | Active | good_for_adhoc_analytics | Unmutable status of a dataflow event. Might be ‘success’ or ‘failed’ Useful as aggregated metric to track failures over dataflows. |

| dataflow_run_event_message | column | Active | good_for_adhoc_analytics | Data flow event message (including errors). Not empty for events with @dataflow_run_event_status = ‘failed’, used for debugging |

| dts_datatable_title | column | Active | good_for_adhoc_analytics | Title of the datatable related to dataflow. One if usecases is to aggregate dataflow run’s metric over datatable title. select @dts_datatable_title, @dataflow_id, @datasource_account_name, maxIf(@dataflow_run_event_time, @is_dataflow_run_complete) as last_completed_at from @src_improvado_dataflow_run_events group by @dts_datatable_title, @dataflow_id, @datasource_account_name |

| workspace_title | column | Active | good_for_adhoc_analytics | Workspace name. Might be useful to aggregate dataflow metrics by workspaces |

| datasource_title | column | Active | good_for_adhoc_analytics | Data source name. Useful to track dataflow metrics over datasources |

| datasource_name | column | In progress | technical_field | Data source internal name |

| datasource_account_name | column | Active | good_for_adhoc_analytics | Data source account name. Useful to track dataflow metrics over datasource accounts |

| dts_agency_whitelabel_host | column | In progress | technical_field | Agency white-label host |

| dts_agency_data_delivery_eta_utc | column | In progress | technical_field | Expected data delivery time in UTC |

| dts_agency_data_delivery_timezone | column | In progress | technical_field | Agency data delivery timezone |

| dts_agency_delivery_eta_time_client_time_zone_str | column | In progress | technical_field | Delivery time string in client's timezone |

| dts_agency_data_delivery_timezone_utc_offset | column | In progress | technical_field | Agency’s timezone offset from UTC |

| dataflow_schedule_time_string | column | In progress | technical_field | String representation of scheduled time (technical) |

| dataflow_run_schedule_item_time | column | In progress | technical_field | Scheduled item time (technical) |

| dataflow_run_event_inserted_rows | column | In progress | technical_field | Number of rows inserted into data table by data flow event |

| dataflow_run_event_updated_rows | column | In progress | technical_field | Number of rows updated in data table by data flow event |

| dataflow_run_duration_seconds | column | Active | good_for_adhoc_analytics | Dataflow run execution duration in seconds. This is cumulative metric for dataflow run, so it make sense to use with aggregation functions like max(@dataflow_run_duration_seconds) or argMax(@dataflow_run_duration_seconds, @dataflow_run_event_time) and group by @dataflow_run_id |

| dataflow_run_delay_after_eta_seconds | column | Active | good_for_adhoc_analytics | Dataflow run’s delay after the expected time in seconds. Useful to track data latency over dataflows select @dataflow_id, avg(@dataflow_run_delay_after_eta_seconds) as avg_dataflow_latency from @src_improvado_dataflow_run_events where @is_dataflow_run_complete group by @dataflow_id |

| is_dataflow_run_complete | column | Active | good_for_adhoc_analytics | Data flow completion flag (1 - completed, 0 - not completed). Useful to filter dataflow run events or aggregate statuses over dataflows or dataflow runs select @dataflow_id, max(@dataflow_run_event_time, @is_dataflow_run_complete) as last_completed_at from @src_improvado_dataflow_run_events |

| use_workspace_separate_schemas | column | In progress | technical_field | Flag for using separate database for workspace |

Improvado team is always happy to help with any other questions you might have! Send us an email.

Contact your Customer Success Manager or raise a request in Improvado Service Desk.

From data to insights, automate and activate your marketing reporting with Al.

.png)

.png)

.png)

.png)

.png)

.png)

.png)